Title: Alibaba HPN: A Data Center Network for Large Language Model Training

Authors: Kun Qian (Alibaba Cloud), Yongqing Xi, Jiamin Cao, Yichi Xu, Yu Guan, Binzhang Fu, Xuemei Shi, Fangbo Zhu, Rui Miao, Chao Wang, Peng Wang, Pengcheng Zhang, Xianlong Zeng, Eddie Ruan, Zhiping Yao, Ennan Zhai, Dennis Cai

Scribe: Mengrui Zhang (Xiamen University)

Introduction: Traditional cloud computing networks carry millions of continuous, steady flows at low utilization, with hundreds of thousands of connections. In contrast, LLM training generates a small number of periodically bursty flows that can reach up to 400 Gbps, and each node opens only a few dozen connections. Because of this mismatch in traffic patterns, running LLM training on a traditional network leads to inefficient bandwidth utilization and severe load imbalance. In addition, LLM training is highly sensitive to network failures, which calls for a more reliable architecture. This paper introduces Alibaba High-Performance Network (HPN), a new network architecture for LLM training.

Key Idea and Contribution: HPN addresses the unique traffic patterns and reliability concerns of LLM training by introducing several innovative features:

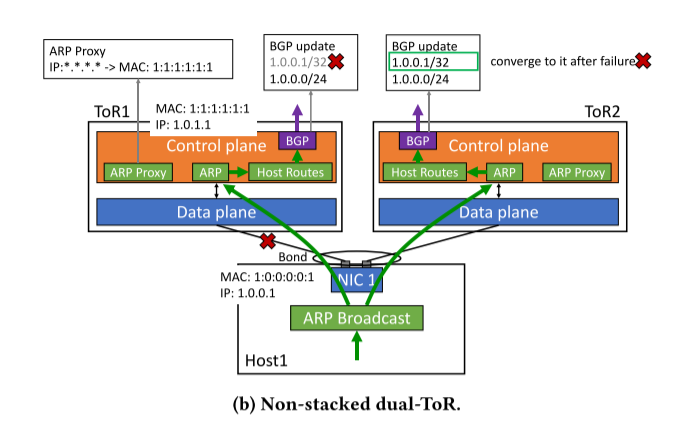

- Non-Stacked Dual-ToR Design: To avoid single points of failure, each NIC is connected to two independent Top-of-Rack (ToR) switches. Synchronization between the two ToRs is achieved through an ARP broadcast module and configurable Link Aggregation Control Protocol (LACP). In addition, BGP is used for inter-segment routing.

-

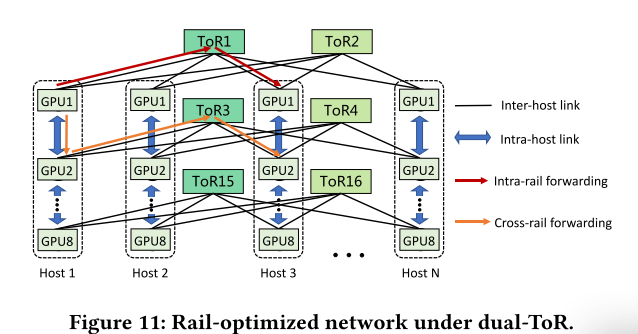

Real-Optimized Network: Utilizing 51.2TBps single-chip switches, HPN mitigates the stability risks associated with multi-chip switches and leverages intra-host bandwidth to connect GPUs efficiently.

-

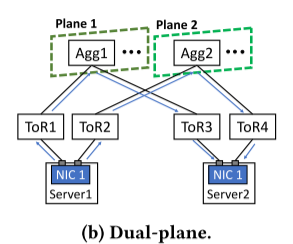

Dual-Plan Design: To eliminate hash polarization and ensure load balance, ToR switches are categorized into two separate groups. This design simplifies path selection, significantly reducing computation complexity and achieving even traffic distribution across ports.
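To make the term "hash polarization" concrete, the toy simulation below shows how it arises in a generic two-tier ECMP fabric. It is a minimal sketch and not HPN's mechanism: the function names, the flow strings, the 8-port fan-out, and the use of SHA-256 as a stand-in for a switch's hardware hash are all assumptions made for illustration. When two consecutive tiers hash the same fields with the same seed, every flow that reaches a second-tier switch over a given uplink picks the same output port again, so the remaining ports sit idle.

```python
import hashlib
from collections import Counter

def ecmp_port(flow: str, n_ports: int, seed: int) -> int:
    """Pick an output port by hashing the flow key (toy stand-in for a switch hash)."""
    digest = hashlib.sha256(f"{seed}|{flow}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % n_ports

def tier2_port_usage(flows, n_ports, seed_tier1, seed_tier2, via_uplink=0):
    """Count tier-2 output ports chosen by flows that tier 1 sent over `via_uplink`."""
    arriving = [f for f in flows if ecmp_port(f, n_ports, seed_tier1) == via_uplink]
    return Counter(ecmp_port(f, n_ports, seed_tier2) for f in arriving)

# Hypothetical flow keys; real switches hash packet header fields such as the 5-tuple.
flows = [f"10.0.0.1->10.0.1.{i % 250}:{4000 + i}" for i in range(1000)]

# Same seed at both tiers: every arriving flow maps to one port (polarization).
polarized = tier2_port_usage(flows, n_ports=8, seed_tier1=1, seed_tier2=1)

# Different seed at tier 2: the same flows spread over several ports.
spread = tier2_port_usage(flows, n_ports=8, seed_tier1=1, seed_tier2=2)

print("same seed:     ", dict(polarized))
print("different seed:", dict(spread))
```

With only a few large flows per node, as in LLM training, this kind of skew is costly because there are too few flows to average out an uneven hash.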

Evaluation: HPN was evaluated in Alibaba Cloud’s production environment and showed significant performance improvements. Training performance for three representative models (LLaMa-7B, LLaMa-13B, and GPT3-175B) improved by 6-14% with HPN. Reliability tests showed that a link failure in the dual-ToR topology caused only a 6% performance degradation, whereas the same failure in a single-ToR setup halted training completely. These results validate HPN’s ability to handle the demanding requirements of LLM training, offering a scalable, high-performance, and reliable solution for future data center networks.

Q1: I have a question regarding HPN performance. I think you have a slide comparing HPN with traditional DCNs on an end-to-end basis, showing a 6 to 10% performance improvement. I’m curious if you have a study detailing the exact sources of this improvement. One explanation might be better load balancing due to using only two tiers. Do you have a definitive answer for this? Additionally, if load balancing can be improved in traditional DCNs, do you still see HPN as essential for end-to-end performance improvement?

A1: The performance improvement primarily stems from two factors: eliminating hash polarization and adopting the dual-plane design. Hash polarization is a key source of load imbalance, and the dual-plane design effectively addresses it. In traditional DCNs with a similar topology, we used a dual-ToR design but did not implement the dual-plane concept, which led to significant performance degradation. These two factors are crucial for the performance gains we observed.

Regarding the second question, even with HPN deployment, we still face challenges such as larger training sizes and increased power requirements. Layer 3 traffic remains necessary for coordination. To address these issues, we are exploring proactive path selection and other solutions to enhance load balancing and overall performance.

Personal Thoughts: The non-stacked dual-ToR design and dual-plane topology effectively tackle both performance and reliability issues, offering a substantial improvement over traditional network architectures. HPN sets a high standard for network architecture in large-scale cloud environments and offers valuable insights for future developments.