Title: Dissecting Carrier Aggregation in 5G Networks: Measurement, QoE Implications and Prediction

Speaker: Eman Ramadan (University of Minnesota – Twin Cities)

Scribe: Yuntao Zhao (Xiamen University)

Authors: Wei Ye, Xinyue Hu, Steven Sleder (University of Minnesota – Twin Cities); Anlan Zhang (University of Southern California); Udhaya Kumar Dayalan (University of Minnesota – Twin Cities); Ahmad Hassan (University of Southern California); Rostand A. K. Fezeu (University of Minnesota – Twin Cities); Akshay Jajoo, Myungjin Lee (Cisco Research); Eman Ramadan (University of Minnesota – Twin Cities); Feng Qian (University of Southern California); Zhi-Li Zhang (University of Minnesota – Twin Cities)

Introduction:

The paper presents an in-depth measurement study of Carrier Aggregation (CA) deployment in commercial 5G networks within the United States. It confirms the role of CA in significantly enhancing throughput and unveils the complex dynamics of diverse frequency band combinations in real-world scenarios. The paper meticulously measures and quantifies the impact of CA, shedding light on the challenges it introduces in network performance analysis and the implications for Application Quality of Experience (QoE).

Building upon these insights, the paper discusses key factors that influence the deployment and effectiveness of CA. It identifies the need for a predictive framework that is cognizant of CA’s intricacies. To this end, the authors introduce Prism5G, a novel deep-learning model explicitly designed to embrace the complexities of CA. Prism5G is distinguished by its ability to model individual Component Carriers (CCs), monitor signaling events, and fuse learning to capture the interactive correlations between CCs in CA configurations.

Through comprehensive evaluations, the paper demonstrates Prism5G’s superiority over existing throughput prediction algorithms, with an average improvement of over 14% in prediction accuracy and up to 22% in specific cases. The results highlight the effectiveness of Prism5G in harnessing the benefits of CA to enhance application performance and QoE.

Key idea and contribution:

Based on the comprehensive measurement and analysis, the paper distills two fundamental requirements for accurate throughput prediction in CA scenarios:

- Need for Modeling Each Component Carrier (CC) Separately: Recognizing the heterogeneity of 5G channels, the paper underscores the necessity of treating each CC as an individual entity with unique characteristics.

- Need for Accounting for Complex and Dynamic Feature Interplay: The paper highlights the complex interactions between various radio parameters across different bands, emphasizing the importance of considering these dynamics for effective throughput prediction.

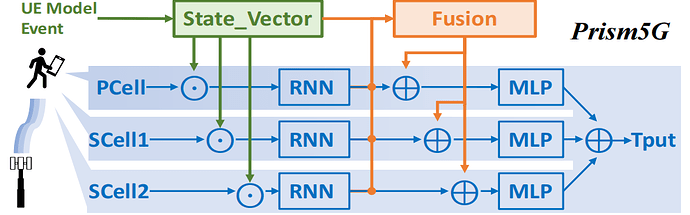

Building upon the identified needs, the paper introduces Prism5G, a CA-aware deep learning framework tailored for 5G throughput prediction, as illustrated in the accompanying figure. The model is designed with the following core components:

- Modeling of Each CC Separately: Prism5G employs a weight-shared neural network for each CC, allowing for fine-grained throughput prediction and enhancing the model’s adaptability to varied network conditions.

- Monitoring the Signaling Events: The framework translates Radio Resource Control (RRC) signaling events into actionable insights, adjusting the states of component carriers in response to network changes.

- Fusion Learning for Feature Interplay: Prism5G leverages fusion learning to capture the complex correlations among different channels, considering the current CA state for a holistic prediction.

- Joint Training: All modules of Prism5G are trained collectively, ensuring that the model learns to predict aggregated throughput by integrating individual CC predictions and their interactions.
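To make the data flow between these components concrete, the following is a minimal sketch and not the authors' implementation. It assumes a plain linear function as a stand-in for the shared per-CC neural network, a lookup table keyed by the number of active CCs as a stand-in for the learned fusion module, and hypothetical `(action, cc_id)` tuples as a distilled form of RRC signaling events. The names `CAState`, `per_cc_predict`, `predict_aggregate`, and `fusion_gain` are invented for illustration, and no training is shown.

```python
from dataclasses import dataclass, field


@dataclass
class CAState:
    """Tracks which component carriers (CCs) are currently active."""
    active: set = field(default_factory=set)

    def apply(self, event):
        # `event` is a hypothetical (action, cc_id) pair derived from RRC signaling.
        action, cc_id = event
        if action == "add":
            self.active.add(cc_id)
        elif action == "release":
            self.active.discard(cc_id)
        return self


def per_cc_predict(features, weights, bias):
    """Shared predictor: the same weights are applied to every CC.

    A linear map clipped at zero stands in for the per-CC neural network.
    """
    return max(0.0, sum(w * x for w, x in zip(weights, features)) + bias)


def predict_aggregate(cc_features, state, weights, bias, fusion_gain):
    """Combine per-CC predictions for the active CCs only.

    `fusion_gain` maps the number of active CCs to a scaling factor; it is an
    assumed placeholder for a learned fusion module conditioned on the CA state.
    """
    per_cc = {
        cc: per_cc_predict(feats, weights, bias)
        for cc, feats in cc_features.items()
        if cc in state.active
    }
    gain = fusion_gain.get(len(per_cc), 1.0)
    return gain * sum(per_cc.values()), per_cc


# Example: two CCs are added, a third is added and then released.
state = CAState()
for ev in [("add", "PCell"), ("add", "SCell1"), ("add", "SCell2"), ("release", "SCell2")]:
    state.apply(ev)

features = {"PCell": [0.75, 0.5], "SCell1": [0.5, 0.25], "SCell2": [1.0, 1.0]}
total, per_cc = predict_aggregate(features, state, [100.0, 200.0], 0.0, {1: 1.0, 2: 0.9})
print(sorted(per_cc), total)
```

The point of the sketch is the ordering: signaling events update the CA state first, the shared predictor then runs once per active CC, and only afterwards is the aggregate formed with knowledge of which CCs are in use.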

Evaluation:

Performance Assessment

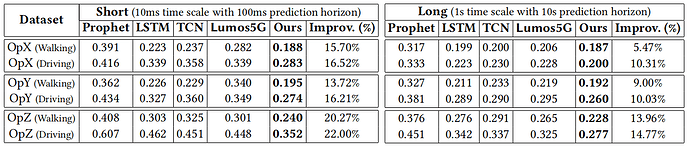

The evaluation of Prism5G’s performance is presented in the accompanying table, which compares its accuracy against several baseline models using the root-mean-square error (RMSE) on collected 5G network traces. The experiments were designed to simulate real-world conditions and use two time scales: a short one with 10 ms granularity and a 100 ms prediction horizon, and a long one with 1 s granularity and a 10 s prediction horizon.
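As a rough illustration of how such an evaluation can be set up, the sketch below slices a throughput trace into history/future pairs and scores predictions with RMSE. The function names and the history length are assumptions made for this example. Only the ratio between granularity and horizon (10 steps in both settings) comes from the description above.

```python
import math


def make_windows(series, history, horizon):
    """Slide over a trace and return (past, future) pairs.

    `history` and `horizon` are counted in samples, so a 100 ms horizon at
    10 ms granularity corresponds to horizon=10.
    """
    pairs = []
    for start in range(len(series) - history - horizon + 1):
        past = series[start:start + history]
        future = series[start + history:start + history + horizon]
        pairs.append((past, future))
    return pairs


def rmse(predicted, actual):
    """Root-mean-square error between two equally long sequences."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


def relative_reduction(baseline_rmse, model_rmse):
    """Fractional RMSE reduction of a model relative to a baseline."""
    return (baseline_rmse - model_rmse) / baseline_rmse


# Toy trace (Mbps) with an assumed history of 3 samples and horizon of 2 samples.
trace = [100.0, 120.0, 90.0, 300.0, 310.0, 290.0]
for past, future in make_windows(trace, history=3, horizon=2):
    naive = [past[-1]] * len(future)  # "last value" baseline predictor
    print(past, future, rmse(naive, future))
```

Under this definition, a model with RMSE 86 against a baseline with RMSE 100 would give `relative_reduction(100, 86)` of about 0.14, which is how a "14% reduction in RMSE" can be read.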

The results from these experiments clearly demonstrate Prism5G’s superiority over the current best baseline models. On average, Prism5G achieves a 14% reduction in RMSE, with a maximum improvement of 22%. This consistent outperformance across both time scales indicates Prism5G’s robustness and adaptability to varying network conditions. Moreover, the results highlight a significant drawback of purely time series prediction algorithms, such as Prophet, which exhibit the highest RMSE and are outperformed by Prism5G in nearly all datasets. This suggests that traditional algorithms struggle to capture the nuances of 5G network dynamics, particularly those introduced by Carrier Aggregation.

Impact on QoE

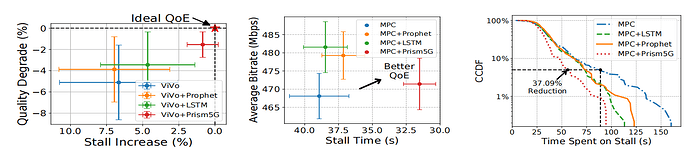

The evaluation also delves into the practical implications of Prism5G’s enhanced predictive capabilities on application performance, specifically focusing on the Quality of Experience (QoE). The paper presents two use cases to illustrate this impact: the integration of Prism5G with an immersive XR application (ViVo+Prism5G) and a video streaming Adaptive Bit Rate (ABR) algorithm (MPC+Prism5G).

- XR Immersive Content Delivery: The paper discusses the application of Prism5G in enhancing the QoE of ViVo, an XR application. By leveraging Prism5G’s fast and accurate throughput predictions, ViVo+Prism5G is able to make near-optimal decisions regarding the quality level of 3D frames, significantly improving the user experience. The results indicate that ViVo+Prism5G attains QoE metrics very close to those of an ideal scenario, outperforming alternatives like ViVo+Prophet and ViVo+LSTM.

- UHD Video-on-Demand Streaming: In the context of video streaming, the paper evaluates the performance of Prism5G in conjunction with an ABR algorithm, MPC. The integration of Prism5G with MPC results in significant improvements in streaming quality, evidenced by a modest increase in average bitrate and a substantial reduction in average stall time. Notably, Prism5G’s integration also leads to substantial improvements in the tail performance of stall times, a critical metric for user satisfaction during streaming.

- Tail Performance: The paper further investigates tail performance, which is a measure of the worst-case scenario experience for users. The results show that MPC+Prism5G significantly outperforms other models in reducing the time spent on stalls, particularly during transition periods when there are significant changes in network conditions due to CA.
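The link between prediction accuracy and stall time can be illustrated with a toy simulation. The sketch below is not MPC and not the paper's evaluation setup: it uses a simplified one-step rule (pick the highest bitrate whose chunk is expected to download before the buffer drains) in place of MPC's multi-step optimization, and every name, bitrate ladder, and trace value is an assumption chosen for illustration. Tail performance is summarized with a nearest-rank percentile.

```python
import math


def pick_bitrate(ladder_mbps, predicted_mbps, buffer_s, chunk_s):
    """Highest bitrate whose chunk should finish downloading before the buffer empties."""
    best = min(ladder_mbps)
    for rate in sorted(ladder_mbps):
        download_s = rate * chunk_s / max(predicted_mbps, 1e-9)
        if download_s <= buffer_s:
            best = rate
    return best


def simulate(ladder_mbps, predicted, actual, chunk_s=1.0, start_buffer_s=2.0):
    """Play one chunk per step; return the chosen bitrates and per-chunk stall times."""
    buffer_s = start_buffer_s
    rates, stalls = [], []
    for pred, real in zip(predicted, actual):
        rate = pick_bitrate(ladder_mbps, pred, buffer_s, chunk_s)
        download_s = rate * chunk_s / max(real, 1e-9)
        stalls.append(max(0.0, download_s - buffer_s))  # playback waits once the buffer is empty
        buffer_s = max(0.0, buffer_s - download_s) + chunk_s
        rates.append(rate)
    return rates, stalls


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


# Throughput drops from 100 to 25 Mbps, e.g. when a secondary carrier is released.
ladder = [10, 50, 100]
actual = [100, 25]
stale_rates, stale_stalls = simulate(ladder, predicted=[100, 100], actual=actual)
good_rates, good_stalls = simulate(ladder, predicted=[100, 25], actual=actual)
print(stale_rates, stale_stalls)
print(good_rates, good_stalls)
```

In this toy case the predictor that misses the drop keeps requesting the top bitrate and stalls, while the predictor that anticipates it steps down and avoids the stall. This is the kind of transition period where tail stall time is most sensitive to prediction quality.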

Q&A:

Q1: I’m curious about the mechanism by which carrier aggregation is activated on mobile phones. Is it dependent on the type of application being used? For instance, when I use my phone for routine web browsing, like opening Facebook, will carrier aggregation be activated in such cases, or do you have any insights on how it’s triggered?

A1: The activation of carrier aggregation isn’t under our direct control. What we can do is lock the band to a specific frequency, which allows us to gather information about the carrier aggregation from the physical layer. This means we can observe the status of carrier aggregation and its impact on network performance, but we don’t have the ability to dictate when it’s activated by the mobile device based on application usage. The actual activation of carrier aggregation is determined by the network conditions and the mobile operator’s configuration, not by the specific applications being used on the phone.

Q1 (follow-up): I see. It’s interesting because carrier aggregation will definitely consume more energy, right? And for applications like web browsing, there are times when you don’t need that much throughput. I wonder if mobile operators activate carrier aggregation based on the type of data being sent. For instance, will they activate carrier aggregation only when there’s a high volume of data transmission, or do they use it whenever possible, even if the application doesn’t require that much bandwidth? I think it’s worth investigating, and it’s quite interesting.

A1 (follow-up): The activation of carrier aggregation by mobile operators is not solely based on the application’s immediate need for bandwidth. It’s more about configuring the network to optimize performance and resource allocation. Oftentimes, they may configure you to have more cells available, but not all cells may be transmitting data simultaneously, depending on various factors including the amount of data buffered and the current network conditions. The use of carrier aggregation is part of a broader strategy to enhance the user experience by potentially increasing data rates, but it’s also balanced against energy efficiency and other operational considerations.

Q2: How did you measure the quality degradation in your study related to carrier aggregation?

A2: We measured quality degradation using metrics focused on the user’s experience, particularly the average quality level of delivered content and the average stall time, which indicates interruptions in playback. This approach allowed us to assess the impact of carrier aggregation on the quality of experience without relying on a single metric like SSIM.

Personal thoughts

The paper contributes to the field of 5G network performance analysis and prediction, with a particular focus on the complexities of Carrier Aggregation. I am impressed by the depth of the measurement study and the thoughtful architecture of the Prism5G framework. The authors have adeptly identified and addressed the intricate factors at play in CA deployments, culminating in a solution that significantly enhances throughput prediction accuracy over existing methodologies.

However, the paper also raises a pertinent issue regarding the trade-off between the improved accuracy of Prism5G and the increased computational overhead it entails. Specifically, Prism5G introduces an average of 34.1% more training time and 23.2% more inference time compared to LSTM. This additional overhead could impact the model’s efficiency in scenarios that demand iterative training or real-time predictions, potentially limiting its applicability. Therefore, future work could further explore optimization in this area.