Title: Harnessing WebRTC for Large-Scale Live Streaming

Authors: Wei Zhang, Tong Meng, Xianhua Zeng, Wei Yang, Changqing Yan, Chao Li, Chenguang Li, Feng Qian (ByteDance); Junfeng Yang (Hunan University of Technology and Business); Lei Zhang (Shenzhen University); Zhi Wang (SIGS, Tsinghua University)

Speaker: Wei Zhang

Scriber: Yining Jiang

Introduction

The paper tackles the challenge of achieving ultra-low latency for large-scale live streaming, which is essential for interactive scenarios such as e-commerce flash sales and live sports. Existing solutions, notably HTTP-FLV over TCP, had been optimized to roughly 5 s of latency at Douyin but could not go lower without severe rebuffering: reducing pre-buffering directly caused unacceptable video stalls, revealing the fundamental limits of TCP-based protocols. WebRTC, designed for real-time communication with sub-second latency, seemed promising, but a naïve deployment degraded user engagement because of issues such as first-frame delay, CPU overhead, and audio-video (AV) drift.

Key Idea and Contribution

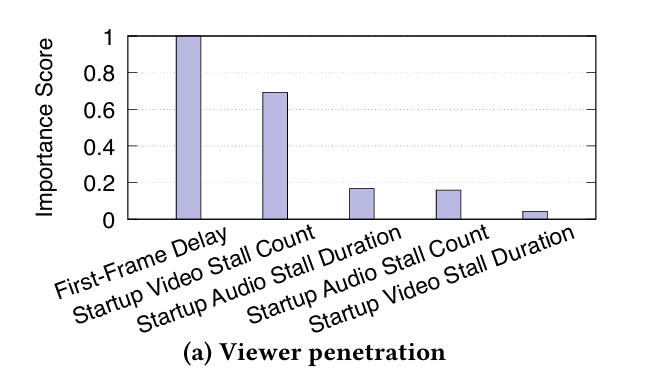

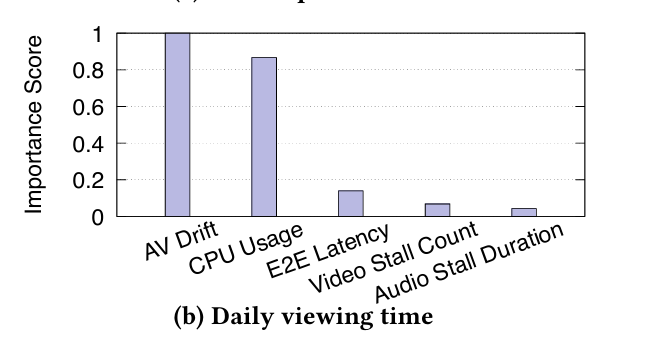

The authors designed RTM (Real-Time Media), a WebRTC-based streaming system customized for billion-scale live broadcasts. Their key insight was to optimize the four QoE metrics most strongly tied to user engagement (first-frame delay, startup video rebuffering, AV drift, and CPU usage) rather than latency alone.

The main contributions are:

-

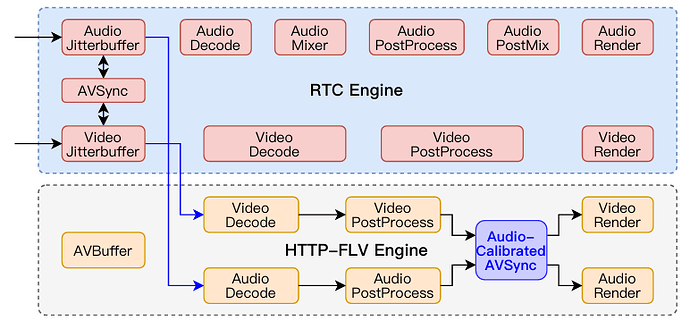

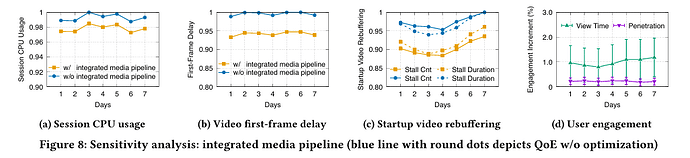

Integrated media processing pipeline – Reusing long-lived codecs and simplifying redundant RTC blocks to cut CPU overhead and first-frame delay.

-

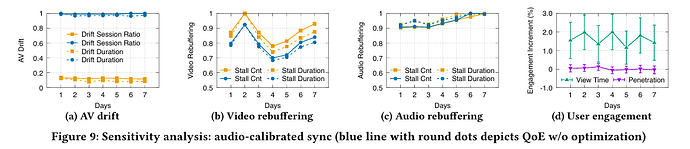

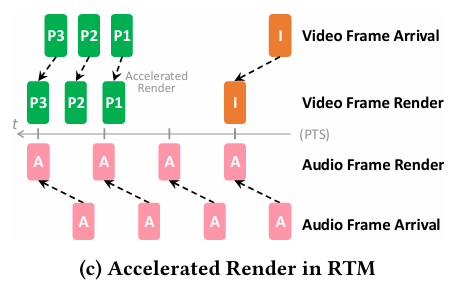

Audio-calibrated AV synchronization – Calibrating video rendering to audio rather than dropping frames, achieving faster AV sync without harming smoothness.

-

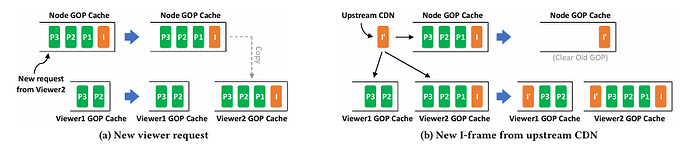

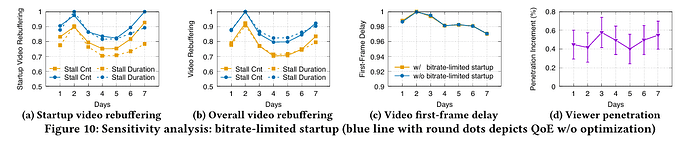

Bitrate-limited startup pacing – Controlling CDN burst rates to prevent startup rebuffering under cached GOP delivery.

- Lightweight SDP negotiation – Compressing and merging signaling to reduce handshake overhead.
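To make the audio-calibrated synchronization idea more concrete, here is a minimal Python sketch. It is not the RTM implementation, whose exact rule these notes do not record. It only illustrates the general approach of treating audio as the master clock and stretching or shrinking each video frame's display time by a bounded amount, so that drift closes without any frame being dropped. The names `calibrated_frame_delay` and `simulate_catch_up` and the constants `TOLERANCE_S` and `MAX_ADJUST` are assumptions made for illustration.

```python
TOLERANCE_S = 0.04  # assumed: drift within 40 ms is treated as "in sync"
MAX_ADJUST = 0.25   # assumed: change each frame's display time by at most 25%


def calibrated_frame_delay(drift_s, frame_interval_s):
    """Return how long to wait before rendering the next video frame.

    drift_s = audio_clock - video_pts, so a positive value means the
    video lags the audio and should be rendered slightly faster.
    """
    if abs(drift_s) <= TOLERANCE_S:
        return frame_interval_s
    limit = MAX_ADJUST * frame_interval_s
    # Clamp the per-frame correction so playback speed changes gently.
    correction = max(-limit, min(limit, drift_s))
    return frame_interval_s - correction


def simulate_catch_up(initial_drift_s, frame_interval_s=0.04, max_frames=1000):
    """Render frames one by one until drift is within tolerance.

    Every frame is rendered (none are dropped); only the wait changes.
    Returns (frames_rendered, residual_drift_s).
    """
    drift = initial_drift_s
    frames_rendered = 0
    while abs(drift) > TOLERANCE_S and frames_rendered < max_frames:
        delay = calibrated_frame_delay(drift, frame_interval_s)
        # Audio advances by `delay` of real time while the video
        # timeline advances by exactly one frame interval.
        drift += delay - frame_interval_s
        frames_rendered += 1
    return frames_rendered, drift


if __name__ == "__main__":
    frames, residual = simulate_catch_up(0.2)
    print(f"in sync after {frames} frames, residual drift {residual:.3f} s")
```

In this sketch, a 200 ms lag at 25 fps closes gradually over a little more than a dozen frames, each shown for 30 ms instead of 40 ms, which trades a brief speed-up for uninterrupted playback.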

These optimizations, validated through billions of A/B test sessions, converted WebRTC’s latency advantage into measurable engagement and revenue gains.
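The bitrate-limited startup pacing item can be illustrated with a small scheduling sketch. It assumes the sender caps its startup burst at a fixed multiple of the stream's video bitrate. The function name `pace_startup` and the `burst_factor` parameter are hypothetical, and the value 2.0 is an illustrative default rather than a number from the paper.

```python
def pace_startup(packet_sizes_bytes, video_bitrate_bps, burst_factor=2.0):
    """Return send-time offsets (seconds) for the packets of a cached GOP.

    Packets are spaced so the send rate never exceeds
    burst_factor * video_bitrate_bps, instead of being dumped
    onto the network as one unpaced burst.
    """
    if video_bitrate_bps <= 0 or burst_factor <= 0:
        raise ValueError("bitrate and burst factor must be positive")
    rate_limit_bps = burst_factor * video_bitrate_bps
    offsets = []
    send_time = 0.0
    for size in packet_sizes_bytes:
        offsets.append(send_time)
        # The next packet may leave only after this one has "used up"
        # its share of the rate limit.
        send_time += size * 8 / rate_limit_bps
    return offsets


if __name__ == "__main__":
    # Ten 1250-byte packets of a 1 Mbps stream, paced at 2x the bitrate.
    for offset in pace_startup([1250] * 10, 1_000_000):
        print(f"send at +{offset * 1000:.1f} ms")
```

A real sender would also have to choose the cap from measured bandwidth and hand over to regular congestion control once the cached GOP has been delivered; the sketch covers only the spacing arithmetic.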

Evaluation

RTM reduced end-to-end latency by 54.5% relative to HTTP-FLV and improved startup rebuffering duration by more than 12%. Viewer penetration and daily viewing time rose by 0.03% and 0.39%, respectively, enough to yield 0.8% more paid orders, which represents billions in revenue for Douyin. Case studies during the FIFA World Cup and the Asian Games showed that Douyin’s streams ran 3.5–31 s ahead of competitors’ streams. These results matter because the Douyin team’s real-world tests had already shown that user penetration and viewing time are closely tied to commercial revenue. They also show that RTM scales to billions of sessions for commercial live streaming, turning low-latency streaming from a research promise into an operational success.

Q&A

Q1: What kind of bitrate adaptation do you use for this work, and have you considered adopting TLadder in this real-time delivery framework?

A1: Probably they can be combined. I am pretty sure.

Q2: What is the current ABR algorithm that you’re using?

A2: We are currently working on the ABR algorithm, and TLadder can probably be used.

Personal Thoughts

I believe this paper addresses very realistic application scenarios. It adapts the protocol to the characteristics of live streaming and to user feedback. The detailed deployment insights (e.g., CDN coordination, fallback to HTTP-FLV for unsupported networks) make this a rare real-world case study at Douyin’s scale. The paper convincingly demonstrates RTM’s viability for global-scale interactive streaming and provides actionable lessons for both industry and research.