Title : Lessons learned from building Software-Based Networks and Networking for the Cloud

Speaker : K. K. Ramakrishnan (University of California, Riverside)

Scribe : Xing Fang (Xiamen University), Chengjin Zhou (Nankai University)

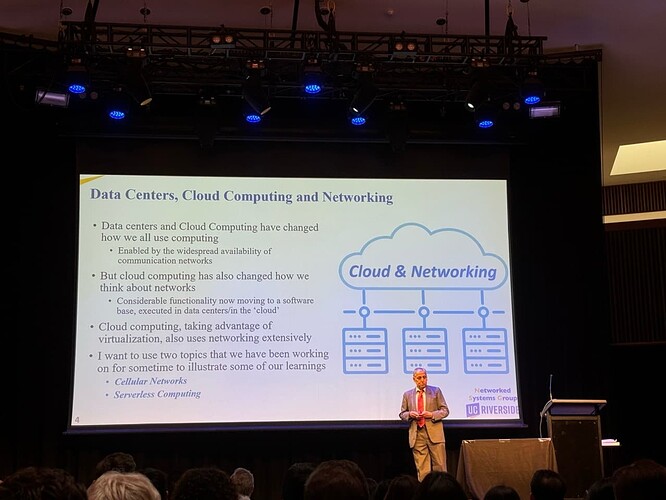

Introduction

Communication networks have become increasingly software-based, especially with the use of Network Function Virtualization (NFV) to run network services in software and to host virtualized networking components in the cloud. This talk focuses on Professor Ramakrishnan’s recent research on applying these techniques in cellular networks and serverless computing.

Cellular networks

Driven by the widespread availability of networking technologies and the need for high performance and flexibility in handling various network functions, cellular networks have evolved from purpose-built hardware appliances to a virtualized, software-based infrastructure. A key challenge in existing cellular networks is the increased control plane latency in the 5G cellular core.

To address this, L25GC (SIGCOMM 22) and L25GC+ (IEEE CloudNet 23) were proposed as more performant implementations of the 5G cellular core. The key idea is to consolidate the network functions (NFs) of a 5G core (5GC) unit on the same node and to simplify the service-based interface by using shared memory for communication between them. Evaluation results show that shared memory significantly reduces communication latency, thanks to zero-copy access and processing that stays entirely in user space.
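The shared-memory idea can be illustrated with a minimal Python sketch. It is not the L25GC implementation: the pool size, the `nf_send` and `nf_receive` helpers, the descriptor format, and the example payload are all assumptions made for illustration. The point it tries to show is that the producer writes the message into a shared pool once and hands over only a small (offset, length) descriptor, so the consumer reads the message in place.

```python
from collections import deque
from multiprocessing import shared_memory

POOL_SIZE = 4096  # hypothetical pool size in bytes


def nf_send(pool, ring, offset, payload):
    """Producer NF: write the payload into the shared pool once,
    then enqueue a small (offset, length) descriptor."""
    pool.buf[offset:offset + len(payload)] = payload
    ring.append((offset, len(payload)))


def nf_receive(pool_name, ring):
    """Consumer NF: attach to the pool by name and read the message in place."""
    offset, length = ring.popleft()
    pool = shared_memory.SharedMemory(name=pool_name)
    try:
        view = pool.buf[offset:offset + length]  # memoryview slice, no copy
        try:
            # Copy only here, so a value can be returned after detaching.
            return bytes(view)
        finally:
            view.release()
    finally:
        pool.close()


def demo():
    pool = shared_memory.SharedMemory(create=True, size=POOL_SIZE)
    ring = deque()  # stands in for a descriptor ring between two NFs
    try:
        nf_send(pool, ring, 0, b"PDU session establishment request")
        return nf_receive(pool.name, ring)
    finally:
        pool.close()
        pool.unlink()


if __name__ == "__main__":
    print(demo())
```

In this sketch both NFs run in one process for brevity; in a real deployment they would be separate processes on the same node attaching to the same named segment.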

Although L25GC/L25GC+ improves QoE for user applications, latency problems persist because the tight interaction between the control and data planes causes numerous protocol message exchanges. Consequently, rather than only improving the system implementation, a software-based and cleanly structured architecture for cellular networks named CleanG (TON 22) was proposed. With the elastic scalability offered by NFV, experimental results suggest that control plane latency can be significantly reduced.

The research emphasizes the importance of thinking holistically about protocols and system implementations. It is crucial to design protocols that are not merely simple re-implementations of legacy systems but are optimized for the current and future technological landscape.

Serverless computing

Serverless computing is one of the fastest-growing offerings in the cloud, operating on the function-as-a-service model. This model allows users to focus on developing their applications while the cloud service provider manages the underlying infrastructure. In a typical serverless infrastructure using Knative, user messages arrive at the ingress gateway, pass through a message broker, and are then sent to functions via sidecar containers.

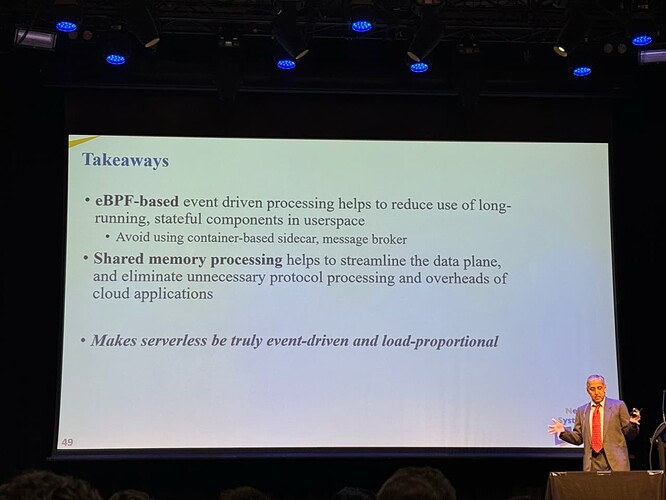

However, stateful and continuously running components in user space, such as message brokers and sidecar containers, as well as kernel-based networking, can introduce processing overhead and reduce efficiency. To address these issues, a lightweight serverless framework for function chains called SPRIGHT has been proposed. SPRIGHT uses eBPF sidecars instead of traditional sidecar containers to offload stateful processing to the kernel. It uses shared memory processing to reduce data movement overhead and employs direct function routing to simplify inter-function invocations.
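Direct function routing can be sketched as follows. This is a toy model under stated assumptions, not SPRIGHT's API: the function names, the `ROUTES` table, and `run_chain` are hypothetical. Each function works on a shared payload in place, and the next function is looked up in a routing table and invoked directly, rather than the message returning to a broker between hops.

```python
# Hypothetical function chain: names and handlers are illustrative only.
ROUTES = {"resize": "classify", "classify": "store", "store": None}


def resize(payload):
    payload.extend(b"|resized")


def classify(payload):
    payload.extend(b"|classified")


def store(payload):
    payload.extend(b"|stored")


HANDLERS = {"resize": resize, "classify": classify, "store": store}


def run_chain(entry, payload):
    """Invoke each function on the shared payload, then route directly
    to the next function named in the routing table."""
    trace = []
    current = entry
    while current is not None:
        HANDLERS[current](payload)  # function mutates the shared buffer in place
        trace.append(current)
        current = ROUTES[current]   # direct lookup, no broker in between
    return trace


if __name__ == "__main__":
    buf = bytearray(b"img")
    print(run_chain("resize", buf), bytes(buf))
```

The bytearray stands in for a shared memory region; only the routing decision moves between functions, while the payload stays where it is.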

In summary, eBPF-based event-driven processing helps eliminate long-running, stateful components in user space, while shared memory processing streamlines the data plane and removes unnecessary protocol processing and overheads in cloud applications. Together, these innovations make serverless computing truly event-driven and load-proportional.

Summary

Networks are becoming more software-based and offer opportunities to improve flexibility and performance. For cellular networks, we need to take the opportunity to rethink the protocols we use. For cloud-based applications, using networking primitives is key to achieving good performance and resource efficiency.

Questions

Q1: Thoughts on programmability in cellular networks and its impact on protocol complexities and message exchanges.

A1: Programmability is well suited to the data plane of a cellular network implementation. However, the core complexity of the control plane remains, which limits the benefits to little beyond faster forwarding.

Q2: In the talk, the motivation for using new virtualized techniques is improving performance metrics. How about metrics such as maintenance and automation?

A2: Much work has been done on automation. Nowadays, eBPF is widely used and is simple enough to adopt.

Q3: Thoughts on new abstractions like the recent changes in hardware and system design impacting protocol implementation.

A3: New abstractions can make protocol implementations more flexible and programmable. However, protocol specifications are still necessary for proper interaction with various devices.

Q4: Short-running functions and the potential to run them long-term in serverless computing.

A4: The design aims to eliminate heavy components, allowing functions to run as needed without restrictions and avoiding forced starts and stops.

Q5: Engagement with standards bodies and their governance structures.

A5: Standards bodies are necessary for consensus but tend to complicate matters. Simpler ideas often struggle in these environments, but simplifying standards is essential for progress.

Q6: Reducing the complexity of protocol design considering new software implementations.

A6: There are many ways to consider fundamental aspects of protocol design.

Q7: Using shared memory and its potential issues like access control and privacy leakage.

A7: Addressing these issues involves using proprietary rules and obfuscation techniques to ensure proper access control and privacy in shared memory environments.

Q8: Can you share your experiences and lessons learned from the obstacles and dead-ends you encountered?

A8: The speaker emphasized the freedom in academia to pursue simplicity, contrasting it with the complexity often added in industry. He shared his 1983 experience with ATM protocols, which started simple but became complicated due to additional features. He stressed the importance of avoiding unnecessary complexity and focusing on simplicity and effective management, especially as modern network devices become more capable and centralized in data centers.

Personal thoughts

I fully agree that rethinking protocols and maintaining simplicity are essential. However, practical challenges often hinder these ideals. For example, BGP’s complexity has grown due to business policies, security concerns, and scalability needs, making it difficult and costly to replace. Additionally, real-world scenarios demand flexible and robust protocols that can handle diverse and dynamic environments, which often leads to increased complexity. Thus, while simplicity is important, achieving it in practice involves balancing it with necessary complexities.