Title: Lessons Learned from Operating a Large Network Telescope

Authors: Alexander Männel (TU Dresden), Jonas Mücke (TU Dresden), kc claffy (CAIDA/UC San Diego), Max Gao (UC San Diego), Ricky K. P. Mok (CAIDA/UC San Diego), Marcin Nawrocki (NETSCOUT), Thomas C. Schmidt (HAW Hamburg), Matthias Wählisch (TU Dresden)

Scribe: Mengrui Zhang (Xiamen University)

Introduction

This paper studies the operational challenges of running a large network telescope, a passive measurement infrastructure that collects unsolicited Internet traffic, also known as Internet background radiation (IBR). Network telescopes are crucial for research on security, censorship, and global Internet dynamics, but their reliability is often taken for granted. With IPv4 scarcity, increasing address space leasing, and massive data volumes, existing telescopes such as the UCSD Network Telescope (UCSD-NT) face serious sustainability and data integrity challenges. Existing tools and systems do not adequately monitor these issues, which risks biasing research results.

Key Idea and Contribution

The authors document their lessons learned from operating UCSD-NT, the largest and longest-running network telescope. Their key contributions include:

- Operational analysis: They describe how UCSD-NT works within the AMPRNet /8 block and highlight the increasing complexity of filtering out active leased prefixes while preserving dark space.

- Validation methods: They introduce a technique to validate telescope coverage and packet loss by leveraging third-party scanning projects (e.g., AlphaStrike and Leitwert). This provides ground truth without injecting new traffic, enabling both operators and researchers to detect missing traffic or misconfigurations.

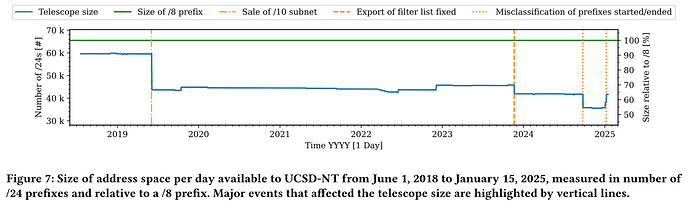

- Longitudinal study: They track UCSD-NT’s size from 2018 to 2025, showing shrinkage due to address sales, new leases, and filter list errors. Even small changes in telescope prefixes can cause synthetic signals or alter research conclusions (e.g., QUIC backscatter observations).

- Operational insights: They analyze overload events where packet loss occurred at >2.6M packets/sec, and show that path-level issues (e.g., BGP misconfigurations, intra-domain loss) also distort data.

- Guidelines: They propose concrete best practices for both operators (monitor BGP, system metrics, document incidents) and data consumers (consider telescope size changes, validate integrity against ground truth).
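The prefix filtering described in the contributions can be illustrated with a small calculation. The following is a minimal sketch, not the authors' tooling: it subtracts a list of excluded (sold or leased) prefixes from a telescope block and reports what fraction remains dark. The function names and the example prefixes are hypothetical and chosen only for illustration.

```python
import ipaddress


def dark_space(telescope, excluded):
    """Return the networks of `telescope` that are not covered by any excluded prefix.

    Both arguments use CIDR notation; `excluded` is a hypothetical filter list.
    """
    remaining = [ipaddress.ip_network(telescope)]
    for prefix in map(ipaddress.ip_network, excluded):
        next_remaining = []
        for net in remaining:
            if prefix.supernet_of(net):
                # The excluded prefix covers this network entirely: drop it.
                continue
            if net.supernet_of(prefix):
                # The excluded prefix is a strict subset: keep everything around it.
                next_remaining.extend(net.address_exclude(prefix))
            else:
                # No overlap: keep the network unchanged.
                next_remaining.append(net)
        remaining = next_remaining
    return sorted(remaining)


def dark_fraction(telescope, excluded):
    """Fraction of the telescope block that is still dark after filtering."""
    total = ipaddress.ip_network(telescope).num_addresses
    dark = sum(net.num_addresses for net in dark_space(telescope, excluded))
    return dark / total


if __name__ == "__main__":
    # Illustrative values only: a /8 with one /10 and one /16 removed.
    print(dark_fraction("44.0.0.0/8", ["44.192.0.0/10", "44.1.0.0/16"]))
```

Recomputing this fraction whenever the filter list changes gives data consumers a simple way to notice that the telescope's size has shifted before interpreting a change in traffic volume.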

Evaluation

The evaluation combines longitudinal data analysis (6 years) with validation against scanning projects. Results show that UCSD-NT’s usable address space dropped from nearly a full /8 to about 63.5% of it due to sales, leases, and filtering changes. They confirmed packet loss both in overload events and due to routing problems outside UCSD’s control. Importantly, they demonstrate that even small prefix allocation changes can drastically shift observed traffic patterns.
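The idea of validating against a third-party scanner can be sketched as a coverage check. This is a simplified illustration under a strong assumption that is not taken from the paper: the scanner is assumed to probe every address of the dark space once per scan, so any dark address missing from the capture suggests loss or a misconfigured filter. The function name, its inputs, and the documentation prefixes used below are hypothetical.

```python
import ipaddress


def coverage_report(dark_prefixes, observed_dsts):
    """Compare destinations seen from one known scanner with the expected dark space.

    dark_prefixes: CIDR strings that the telescope is supposed to capture.
    observed_dsts: destination addresses of the scanner's packets in the capture.
    """
    nets = [ipaddress.ip_network(p) for p in dark_prefixes]
    expected = sum(net.num_addresses for net in nets)

    seen = set()        # distinct dark addresses that received a probe
    unexpected = set()  # probes captured outside the declared dark space
    for dst in observed_dsts:
        addr = ipaddress.ip_address(dst)
        if any(addr in net for net in nets):
            seen.add(addr)
        else:
            unexpected.add(addr)

    return {
        "expected": expected,
        "seen": len(seen),
        "coverage": len(seen) / expected,
        "unexpected": [str(a) for a in sorted(unexpected)],
    }


if __name__ == "__main__":
    report = coverage_report(
        ["192.0.2.0/30"],
        ["192.0.2.0", "192.0.2.1", "192.0.2.1", "198.51.100.7"],
    )
    print(report)
```

A coverage value well below 1.0 points to missing traffic, while a non-empty `unexpected` list points to a filter list that no longer matches what the telescope actually receives.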

Q&A

Q1: Does selling part of the telescope address space reduce its quality, and how small can a telescope be while still useful? Would RPKI eliminate the need for telescopes?

A1: Telescope size changes can create synthetic effects and bias results, but the authors did not deeply study the impact of smaller telescopes or targeted prefixes. RPKI helps mitigate hijacks, but telescopes still provide unique visibility into IBR.

Q2: Much of the validation seems manual — can this be automated?

A2: Yes. The authors acknowledge that validation should be automated to continuously monitor telescope health and provide timely information to researchers. They are working on automating these processes.

Personal Thoughts

I like that this paper bridges infrastructure operations with research integrity. Often, measurement papers assume telescope data is “clean,” but this study shows that operational quirks (filter list updates, prefix leases, overload) can heavily bias results. I also appreciate their pragmatic approach: instead of introducing new probing traffic, they cleverly repurpose existing scanning projects as ground truth.