Title: NetLLM: Adapting Large Language Models for Networking

Authors: Duo Wu, Xianda Wang, Yaqi Qiao (The Chinese University of Hong Kong); Zhi Wang (Tsinghua University); Junchen Jiang (The University of Chicago); Shuguang Cui, Fangxin Wang (The Chinese University of Hong Kong)

Speaker: Duo Wu (The Chinese University of Hong Kong)

Scribe: Yuling Lin (Xiamen University)

Introduction

The paper studies how to bring the capabilities of large language models (LLMs) to networking. The problem matters because networking tasks, which involve complex prediction and system optimization, could benefit substantially from the pre-trained knowledge and inference abilities of LLMs, yet existing systems and tools are not designed to exploit them. Current deep learning-based networking algorithms have two main limitations: high model engineering costs, because each task needs its own DNN design, and poor generalization to unseen data distributions or environments. Applying LLMs directly is also difficult. The input modalities of networking tasks differ substantially from the plain text that LLMs are designed to handle, and generating answers by predicting tokens one by one can be slow and inaccurate for tasks that need rapid, reliable responses. The paper addresses these challenges with the NetLLM framework, which adapts LLMs to solve networking problems efficiently while maintaining strong generalization.

Key idea and contribution

The key idea is to adapt LLMs to solve complex tasks in the networking domain. The authors present NetLLM as the first unified and efficient framework for tailoring LLMs to a variety of networking tasks. It targets three obstacles to using LLMs in networking: the mismatch in input modalities, inefficient answer generation, and high adaptation costs caused by the large parameter count of LLMs.

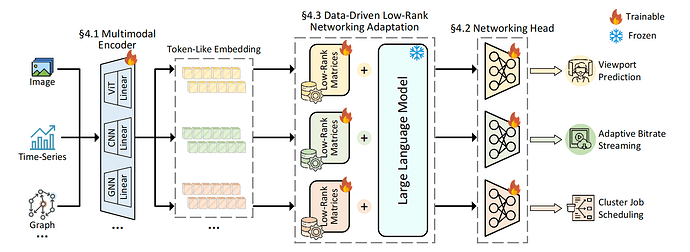

NetLLM introduces three main components: a multimodal encoder that processes diverse input data, a networking head that generates valid, task-specific answers, and a data-driven low-rank networking adaptation (DD-LRNA) scheme that reduces adaptation costs. The multimodal encoder translates the various input modalities into a format compatible with LLMs, the networking head ensures that answers are valid and produced efficiently, and the DD-LRNA scheme makes fine-tuning for domain-specific knowledge cheaper. The framework is significant because it both draws on the extensive knowledge of LLMs and yields adapted models that perform well across several networking tasks, including viewport prediction, adaptive bitrate streaming, and cluster job scheduling. The work points to a promising direction for networking algorithms, in which a single model is adapted to multiple tasks with less engineering effort and better performance.
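To make two of these components concrete, here is a minimal sketch in plain Python, not the authors' implementation. It assumes the networking head is a linear layer followed by an argmax over a fixed candidate set, and that the low-rank adaptation follows the common pattern of a frozen weight plus a trainable product of two small matrices. The bitrate ladder, the dimensions, and all names (`networking_head`, `LowRankLinear`) are hypothetical.

```python
import math
import random

# Hypothetical ABR bitrate ladder in kbps; the values are illustrative only.
BITRATES_KBPS = [300, 750, 1200, 1850, 2850, 4300]


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def networking_head(hidden, head_weights, candidates):
    """Map an output feature vector to one valid answer in a single pass.

    The answer is the argmax over a fixed candidate set, so it can never
    fall outside that set, unlike free-form token-by-token generation.
    """
    probs = softmax(matvec(head_weights, hidden))
    best = max(range(len(candidates)), key=lambda i: probs[i])
    return candidates[best], probs


class LowRankLinear:
    """Frozen weight W (d_out x d_in) plus a trainable update B @ A of rank r."""

    def __init__(self, frozen_weight, rank, rng):
        d_out, d_in = len(frozen_weight), len(frozen_weight[0])
        self.frozen_weight = frozen_weight  # never updated during adaptation
        # A starts small and random, B starts at zero, so the adapted layer
        # initially behaves exactly like the frozen one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.frozen_weight, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [p + q for p, q in zip(base, delta)]

    def trainable_params(self):
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])

    def frozen_params(self):
        return len(self.frozen_weight) * len(self.frozen_weight[0])


rng = random.Random(0)
d_model = 16  # toy hidden size, far smaller than any real LLM
frozen = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(d_model)]
layer = LowRankLinear(frozen, rank=2, rng=rng)
head = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in BITRATES_KBPS]

x = [rng.gauss(0.0, 1.0) for _ in range(d_model)]
features = layer.forward(x)
bitrate, probs = networking_head(features, head, BITRATES_KBPS)
print(bitrate, layer.trainable_params(), layer.frozen_params())
```

In this toy setting the rank-2 update trains 64 parameters against 256 frozen ones. The gap widens as the hidden size grows, which is the intuition behind the reduced adaptation cost.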

Evaluation

NetLLM was evaluated on three networking tasks: viewport prediction (VP), adaptive bitrate streaming (ABR), and cluster job scheduling (CJS). NetLLM-adapted LLMs significantly outperformed state-of-the-art algorithms, with improvements of 10.1-36.6% in mean absolute error for VP, 14.5-36.6% in Quality of Experience scores for ABR, and 6.8-41.3% in job completion time for CJS. The adapted models also generalized better to environments whose settings differed from those used in training. These results indicate that NetLLM can harness LLMs for networking tasks reliably and efficiently while adapting to new, unseen scenarios. For the networking community, the work offers a unified approach to algorithm design that can reduce engineering overhead and improve the robustness of network systems in real-world deployments.

Q: As the number of specialized tasks increases, both the engineering effort to design models and the data complexity for those models increase linearly. A challenge with large language models (LLMs) is that they need to learn different distributions and features for different tasks, which could cause data complexity to grow due to the “curse of dimensionality.” How is this issue addressed or viewed in the context of developing a universal model for networking tasks?

A: In fact, we are moving forward with the integration of Large Language Models (LLMs) into networking. Our primary motivation for this integration is the reduction of model engineering overhead. Previously, with DL-based approaches, we were required to initially design a DNN model, the architecture of which was crucial and often complex. This complexity drove us to continually design increasingly intricate models to achieve better performance. However, due to the nature of DNN models, the design process typically unfolded in a trial-and-error environment, which could be both time-consuming and tedious. To transform this situation, we are leveraging LLMs as a foundational solution. By using LLMs, we aim to streamline the process, making it more efficient and less labor-intensive, while still maintaining high performance across a variety of networking tasks.

Q: 1. You mentioned that for training an LLM using reinforcement learning, an existing policy is required to bootstrap the process. Could you specify what the existing policy is that is used for this initial training phase?

2. I’m interested in understanding the runtime complexity of the LLM approach. Is it feasible to run this model directly on the device, or does it require the computational power of a server?

A: 1. In our experiments, we utilize GENET, an enhanced iteration of the Pensieve model, as our existing policy. This policy serves as the foundation for generating a clear and concise experience dataset, which is instrumental for fine-tuning our models.

2. We conducted our experiments on a setup with 40 GB A100 GPUs. The runtime for a 7-billion-parameter model is less than half a second. When scaling down to a 1-billion-parameter model, the runtime drops to just a few milliseconds, which shows the potential for real-time applications. However, deploying these models at the network’s edge requires additional work. Thank you for your interest, and we look forward to sharing more advancements in this area.
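The bootstrapping step described in the first part of this answer, where an existing policy generates an experience dataset for fine-tuning, can be sketched roughly as follows. This is a toy illustration under stated assumptions: `existing_policy` is a trivial stand-in and not GENET, the bandwidth samples and reward are made up, and the return-to-go annotation assumes a return-conditioned offline setup that these notes do not spell out.

```python
import random

# Hypothetical ABR bitrate ladder in kbps, sorted ascending.
LADDER = [300, 750, 1200, 1850, 2850, 4300]


def existing_policy(throughput_kbps, ladder):
    """Stand-in bootstrap policy: pick the highest bitrate below throughput."""
    feasible = [b for b in ladder if b <= throughput_kbps]
    return feasible[-1] if feasible else ladder[0]


def collect_trajectory(policy, ladder, steps, rng):
    """Roll the policy through toy bandwidth samples, logging each step."""
    trajectory = []
    previous = ladder[0]
    for _ in range(steps):
        throughput = rng.uniform(200, 5000)  # hypothetical kbps sample
        action = policy(throughput, ladder)
        # Toy reward: bitrate utility minus a penalty for switching quality.
        reward = action / 1000.0 - abs(action - previous) / 1000.0
        trajectory.append({"state": throughput, "action": action, "reward": reward})
        previous = action
    return trajectory


def add_returns_to_go(trajectory):
    """Annotate each step with the sum of rewards from that step onward."""
    running = 0.0
    for step in reversed(trajectory):
        running += step["reward"]
        step["return_to_go"] = running
    return trajectory


rng = random.Random(0)
# The experience dataset: trajectories collected once, then reused offline
# for fine-tuning without further interaction with the environment.
dataset = [
    add_returns_to_go(collect_trajectory(existing_policy, LADDER, steps=5, rng=rng))
    for _ in range(3)
]
print(len(dataset), len(dataset[0]))
```

Because the data is collected once up front, the model being adapted never has to interact with the environment during fine-tuning.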

Q: Are there any benchmarks available for assessing the performance of the NetLLM framework on various network tasks? Additionally, are there guidelines that can help us determine which network tasks are best suited for application with Large Language Models?

A: Currently, there is no dedicated benchmark for assessing LLMs on networking tasks. We’ve categorized these tasks into two main types: prediction tasks, which are often tackled by supervised learning, and decision-making tasks, addressed by reinforcement learning. Our NetLLM framework is designed to be versatile, capable of handling both types of tasks. If your networking task falls into either category, NetLLM can be effectively applied.

Personal thoughts

The paper presents a compelling approach to integrating large language models into the realm of networking, addressing a gap in the current literature. The comprehensive evaluation across different networking tasks and the clear demonstration of superior performance over existing algorithms are noteworthy. The idea of a unified framework that can adapt a single LLM to various tasks is particularly powerful, as it suggests a move towards more generalized and cost-effective solutions in networking.

There are open questions and potential areas for further exploration. For instance, while the paper discusses the adaptation of LLMs to networking tasks, the extent to which these models can be adapted to other domains or how they might handle evolving and more complex networking scenarios over time is not fully explored. Additionally, the paper mentions the use of multimodal LLMs but finds that they do not necessarily outperform single-modal LLMs in networking. This raises questions about the role and optimization of multimodal capabilities in this context.