Title: Pegasus: A Universal Framework for Scalable Deep Learning Inference on the Dataplane

Authors: Yinchao Zhang, Su Yao, Yong Feng (Tsinghua University); Kang Chen (Tsinghua University & Beijing National Research Center for Information Science and Technology); Tong Li (Renmin University of China); Zhuotao Liu (Tsinghua University & Zhongguancun Laboratory); Yi Zhao (Beijing Institute of Technology); Lexuan Zhang, Xiangyu Gao (Tsinghua University); Feng Xiong (Beihang University); Qi Li (Tsinghua University); Ke Xu (Tsinghua University & Zhongguancun Laboratory)

Scribe: Xuanhao Liu (Xiamen University)

Introduction

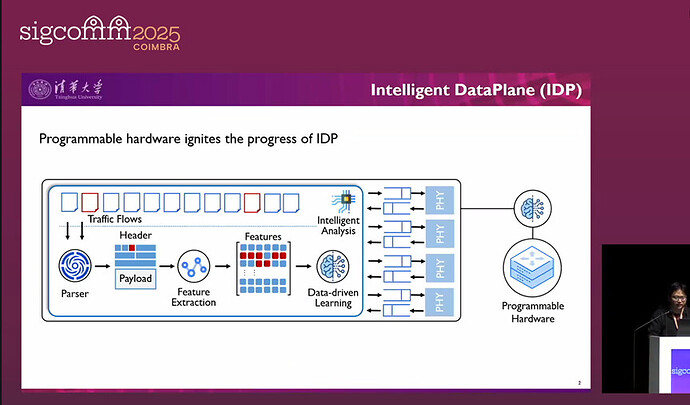

The core problem studied in this paper is how to execute deep learning (DL) model inference efficiently and universally on the dataplane of commercial programmable switches. The problem matters because embedding models directly in the dataplane (an Intelligent DataPlane, IDP) enables real-time traffic analysis at line speed, such as malicious traffic detection and fine-grained traffic classification, which is increasingly important as network environments grow more complex. Existing systems and tools fall short. The dataplane is designed for high-speed packet processing, and its core abstraction, the Match-Action Table (MAT), is fundamentally mismatched with the many complex mathematical operations (e.g., multiplication, exponentiation) that DL models require. Current methods either simplify computation through model binarization (e.g., N3IC) or bypass computation by storing input-output mappings in tables (e.g., BoS), and both suffer from three key limitations: accuracy degradation (due to precision loss), poor scalability (limited model and input size), and a lack of generality (support for only specific types of models or operations).
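To make the precision-loss and scalability limitations concrete, here is a small illustrative sketch; it is not N3IC's or BoS's actual code. It compares a full-precision neuron with a sign-binarized one computed via XNOR and popcount (the usual multiplication-free trick in binarized networks), and counts how many entries a naive exhaustive input-to-output table would need. The weights, inputs, bit widths, and function names are made up for illustration.

```python
import numpy as np

def full_precision_neuron(w, x):
    """Reference neuron: real-valued dot product (needs multiplications)."""
    return float(np.dot(w, x))

def binarized_neuron(w, x):
    """Sign-binarize w and x to {-1, +1} and compute their dot product with
    XNOR + popcount instead of multiplication (illustrative only)."""
    wb = np.asarray(w) >= 0                     # True encodes +1, False encodes -1
    xb = np.asarray(x) >= 0
    agree = int(np.count_nonzero(~(wb ^ xb)))   # XNOR, then popcount
    return 2 * agree - len(wb)                  # = sum_i sign(w_i) * sign(x_i)

def exhaustive_table_entries(num_features, bits_per_feature):
    """Entries a naive table needs to store every input -> output mapping."""
    return 2 ** (num_features * bits_per_feature)

# Made-up example values.
w = np.array([0.9, -0.1, 0.05, -0.8])
x = np.array([1.0, 0.2, -3.0, 0.1])

fp = full_precision_neuron(w, x)   # about 0.65 (positive)
bn = binarized_neuron(w, x)        # -2 (sign flipped by binarization)
print(fp, bn)
print(exhaustive_table_entries(4, 8))   # 2**32 entries for only 4 features
```

With these values the binarized neuron flips the sign of the output, and an exhaustive table for just 4 features of 8 bits each already needs 2^32 entries, which is why such tables stop scaling as inputs grow.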

Key idea and contribution

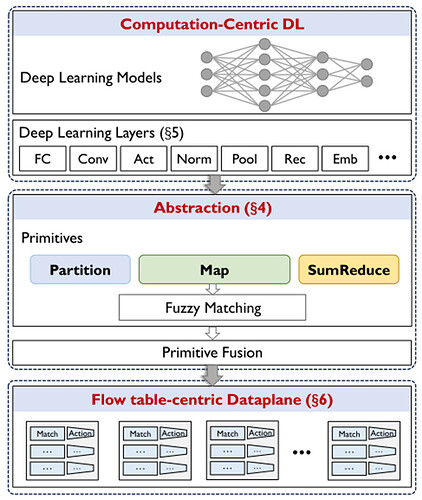

To address the aforementioned issues, the authors built a universal framework named Pegasus. Its core idea is to translate complex DL operations into three fundamental, dataplane-oriented primitives: Partition, Map, and SumReduce. Specifically, Partition “divides” high-dimensional input feature vectors into multiple low-dimensional sub-vectors, making them more suitable for processing on the resource-constrained dataplane. Next, the Map primitive “processes” these low-dimensional vectors in parallel using a technique called Fuzzy Matching. Fuzzy Matching approximates computation results by looking up pre-computed tables, thereby avoiding complex operations like multiplication in hardware. Finally, the SumReduce primitive “combines” the results from the parallel computations to complete the final aggregation.
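The three primitives can be sketched for a single linear layer y = Wx. This is a minimal NumPy sketch under stated assumptions, not Pegasus's implementation: plain k-means stands in for whatever clustering produces the Fuzzy Matching centroids, and a nearest-centroid search stands in for the match-table lookup a switch would perform. Offline, each segment's centroids are multiplied by the matching slice of W, so at inference time Map only reads a precomputed partial result and SumReduce only adds. All function names are hypothetical.

```python
import numpy as np

def partition(x, seg_len):
    """Partition: split a D-dim vector into D / seg_len sub-vectors."""
    x = np.asarray(x, dtype=np.float64)
    assert x.size % seg_len == 0, "D must be divisible by seg_len"
    return x.reshape(-1, seg_len)

def kmeans(data, k, iters=20, seed=0):
    """Plain k-means, standing in (by assumption) for the clustering that
    learns fuzzy-matching centroids from training data."""
    rng = np.random.default_rng(seed)
    cents = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iters):
        dists = ((data[:, None, :] - cents[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for c in range(k):
            members = data[labels == c]
            if len(members):
                cents[c] = members.mean(axis=0)
    return cents

def build_tables(W, train_X, seg_len, k):
    """Offline: per segment, learn centroids and precompute W_seg @ centroid."""
    centroids, tables = [], []
    for s in range(W.shape[1] // seg_len):
        cols = slice(s * seg_len, (s + 1) * seg_len)
        C = kmeans(train_X[:, cols], k)
        centroids.append(C)
        tables.append(C @ W[:, cols].T)   # shape (k, out_dim)
    return centroids, tables

def infer(x, centroids, tables, seg_len):
    """Online: Map each sub-vector to its nearest centroid and read the
    precomputed partial product; SumReduce adds the partials."""
    partials = []
    for s, sub in enumerate(partition(x, seg_len)):
        idx = int(((centroids[s] - sub) ** 2).sum(axis=1).argmin())  # Map
        partials.append(tables[s][idx])
    return np.sum(partials, axis=0)                                  # SumReduce

rng = np.random.default_rng(1)
W = rng.normal(size=(3, 8))            # full-precision weights
train_X = rng.normal(size=(500, 8))    # made-up "training" inputs
centroids, tables = build_tables(W, train_X, seg_len=2, k=16)

x = rng.normal(size=8)
print("exact :", W @ x)
print("approx:", infer(x, centroids, tables, seg_len=2))
```

When every input sub-vector coincides with a centroid the result is exact; otherwise the approximation generally improves as more centroids (and hence more table entries) are used, which is the accuracy versus resource trade-off the dataplane forces.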

The main contribution of Pegasus is that it is the first universal IDP framework capable of supporting multiple DL models (including MLP, RNN, CNN, and AutoEncoder) on commercial programmable switches without hardware modifications. To further enhance scalability, Pegasus introduces Primitive Fusion, a technique that merges consecutive operations into a single table lookup, significantly reducing resource overhead. In addition, by using full-precision weights and fixed-point activations, Pegasus largely preserves the model's original accuracy.
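Primitive Fusion can be illustrated by folding two consecutive Maps, a per-feature normalization followed by a linear layer, into one precomputed table. The sketch below also stores table entries as fixed-point integers so that SumReduce needs only integer additions, which is one plausible reading of "full-precision weights and fixed-point activations". The normalization step, the 8-bit fractional format (`FRAC_BITS`), the nearest-centroid matching, and all helper names are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

FRAC_BITS = 8  # hypothetical fixed-point format: real value = integer / 2**FRAC_BITS

def to_fixed(v):
    """Quantize real values to fixed-point integers (round to nearest)."""
    return np.round(np.asarray(v) * (1 << FRAC_BITS)).astype(np.int64)

def nearest(sub, centroids):
    """Fuzzy-match stand-in: index of the closest centroid."""
    return int(np.argmin(((centroids - sub) ** 2).sum(axis=1)))

def unfused(sub, centroids, mu, sigma, W_seg):
    """Two consecutive Maps in float: normalize, then apply the weights."""
    z = (centroids[nearest(sub, centroids)] - mu) / sigma   # Map 1: normalize
    return W_seg @ z                                        # Map 2: linear

def build_fused_table(centroids, mu, sigma, W_seg):
    """Fold both Maps into one table offline; entries stored in fixed point."""
    return to_fixed(((centroids - mu) / sigma) @ W_seg.T)  # shape (k, out_dim)

rng = np.random.default_rng(0)
seg_len, num_segs, k, out_dim = 2, 3, 8, 4
W = rng.normal(size=(out_dim, seg_len * num_segs))   # full-precision weights
mu = rng.normal(size=seg_len * num_segs)
sigma = rng.uniform(0.5, 2.0, size=seg_len * num_segs)
cents = [rng.normal(size=(k, seg_len)) for _ in range(num_segs)]

x = rng.normal(size=seg_len * num_segs)
ref = np.zeros(out_dim)
acc = np.zeros(out_dim, dtype=np.int64)   # SumReduce accumulator: integer adds only
for s in range(num_segs):
    cols = slice(s * seg_len, (s + 1) * seg_len)
    sub = x[cols]
    ref += unfused(sub, cents[s], mu[cols], sigma[cols], W[:, cols])
    table = build_fused_table(cents[s], mu[cols], sigma[cols], W[:, cols])
    acc += table[nearest(sub, cents[s])]  # one lookup per segment

fused_result = acc / (1 << FRAC_BITS)
max_err = float(np.max(np.abs(fused_result - ref)))
print(max_err)  # bounded by num_segs * 0.5 / 2**FRAC_BITS (rounding only)
```

Because each fused entry is rounded once, the total deviation from the float reference is bounded by num_segs * 0.5 / 2^FRAC_BITS, while the pipeline needs one table stage per segment instead of two.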

Evaluation

The authors implemented and evaluated Pegasus on a P4-based Tofino 2 programmable switch. The results show that Pegasus effectively supports various types of DL models on the dataplane, including Multi-Layer Perceptrons (MLP), Recurrent Neural Networks (RNN), Convolutional Neural Networks (CNN), and AutoEncoders. Compared with state-of-the-art methods such as N3IC and BoS, Pegasus improved average accuracy by up to 22.8% and supported models up to 248 times larger and inputs up to 212 times larger. This result is significant because it demonstrates that, without special hardware acceleration, complex and large-scale DL models can run on existing commercial network devices at line speed, solely through new software abstractions and compilation techniques. This paves the way toward a truly universal intelligent dataplane.

Q: I have a question. You mentioned that your solution's performance reached 600 times that of a GPU, which is very impressive. Have you compared the power consumption of the Tofino switch and the GPU you used? How does their power usage compare?

A: We transferred all the data into GPU memory, ran the GPU at full power, and then compared their throughput.

Q: Okay, but are you using a single GPU and a single Tofino switch? Is that correct?

A: Yes, we used four GPUs and one Tofino 2 switch.

Q: Okay, so have you compared the power consumption between the two?

A: No, we haven’t made a comparison in that regard.

Personal thoughts

In my opinion, the most commendable aspect of this paper is its “primitivization” concept. It cleverly bridges the gap between computation-centric deep learning models and the flow-table-centric dataplane, transforming a hardware mismatch problem into a compilation and abstraction problem—a very elegant approach. The optimization techniques, particularly Fuzzy Matching and Primitive Fusion, not only solve the core computational challenges but also fully consider the limited storage and lookup resources of the dataplane. This reflects the authors’ deep understanding of underlying hardware constraints, making the entire solution highly practical and well-grounded.

However, if there is one area for discussion, I believe the framework’s performance relies to some extent on the effectiveness of the clustering parameters used in Fuzzy Matching, which are learned from training data. This implies that when the distribution of real-world network traffic deviates significantly from the training data (i.e., violating the i.i.d. assumption in machine learning), the model’s accuracy could be affected. An open question worth exploring would be: Is it possible to design an online, adaptive mechanism to dynamically adjust or update the parameters of Fuzzy Matching to cope with dynamic changes in network traffic, thereby further enhancing the system’s robustness and adaptability?
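One possible answer to this open question, purely hypothetical and not part of Pegasus, is a sequential (MacQueen-style) k-means update: each observed sub-vector nudges its nearest centroid, and the corresponding precomputed entry is recomputed so that a control plane would only need to rewrite that one table entry. The class name, learning rate, and update rule below are all assumptions.

```python
import numpy as np

class OnlineCentroids:
    """Hypothetical sketch, not part of Pegasus: sequential (MacQueen-style)
    k-means that moves fuzzy-matching centroids toward live traffic and keeps
    the precomputed partial products in sync."""

    def __init__(self, centroids, W_seg, lr=0.05):
        self.centroids = np.array(centroids, dtype=np.float64)
        self.W_seg = np.asarray(W_seg, dtype=np.float64)
        self.lr = lr                                  # assumed step size
        self.table = self.centroids @ self.W_seg.T    # entry c = W_seg @ centroid c

    def observe(self, sub):
        """Update the nearest centroid and its table entry; return its index,
        i.e. the single entry a control plane would need to rewrite."""
        sub = np.asarray(sub, dtype=np.float64)
        idx = int(((self.centroids - sub) ** 2).sum(axis=1).argmin())
        self.centroids[idx] += self.lr * (sub - self.centroids[idx])
        self.table[idx] = self.W_seg @ self.centroids[idx]
        return idx

# Made-up drift: traffic now sits at (1, 1), but a centroid was learned at (0, 0).
oc = OnlineCentroids([[0.0, 0.0], [10.0, 10.0]], W_seg=np.eye(2))
touched = {oc.observe([1.0, 1.0]) for _ in range(100)}
print(touched, oc.centroids[0])   # only entry 0 changes; it approaches (1, 1)
```

A real deployment would also have to bound how fast centroids may drift and how often entries are pushed, so that a burst of unusual or adversarial traffic cannot quietly reshape the model.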