Title: Prudentia: Findings of an Internet Fairness Watchdog

Authors:

Adithya Abraham Philip (Carnegie Mellon University); Rukshani Athapathu (University of California San Diego); Ranysha Ware, Fabian Francis Mkocheko, Alexis Schlomer, Mengrou Shou (Carnegie Mellon University); Zili Meng (HKUST); Srinivasan Seshan, Justine Sherry (Carnegie Mellon University)

Introduction

The study explored in “Prudentia: Findings of an Internet Fairness Watchdog” addresses fairness in bandwidth allocation among Internet services that compete for the same congested network links. The motivation is the observable disparity in service quality when different applications share network resources. Existing evaluations often examine individual components, such as congestion control algorithms, in isolation and fail to capture the dynamic, heterogeneous conditions of real networks, which can leave unfair and suboptimal allocations undetected.

Key Idea and Contribution

The core contribution of this paper is Prudentia, an independent, ongoing monitoring platform that evaluates fairness among live, end-to-end Internet services. Prudentia assesses the contentiousness and sensitivity of different applications by measuring their performance in controlled testbeds that simulate a range of network conditions. The key innovation is the shift from component-specific assessments, such as evaluating congestion control algorithms (CCAs) in isolation, to service-level assessments, which give a holistic view of how design choices across the whole application stack influence the fairness of bandwidth allocation.
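To make "contentiousness" and "sensitivity" concrete, here is a minimal Python sketch built on our own simplifying assumptions rather than the paper's exact metrics. Each trial pairs two services on a bottleneck of known capacity, and a service's fair share is an equal split. Sensitivity is how far a service falls below its fair share on average. Contentiousness is how far it pushes its competitors below theirs. The function names, the `results` format, and the example numbers are all hypothetical.

```python
from statistics import mean


def fraction_of_fair_share(throughput_mbps, capacity_mbps, n_services=2):
    """Throughput relative to an equal split of the bottleneck (1.0 = exactly fair)."""
    return throughput_mbps / (capacity_mbps / n_services)


def mean_shortfall(fractions):
    """Average amount by which fractions fall below 1.0; overshoots count as 0."""
    return mean(max(0.0, 1.0 - f) for f in fractions)


def score_services(results, capacity_mbps):
    """Hypothetical sensitivity/contentiousness scores from pairwise trials.

    results maps (a, b) -> (throughput_a, throughput_b), in Mbps, measured while
    services a and b shared a bottleneck of capacity_mbps.
    """
    own = {}     # service -> its own fractions of fair share
    caused = {}  # service -> its competitors' fractions of fair share
    for (a, b), (tput_a, tput_b) in results.items():
        frac_a = fraction_of_fair_share(tput_a, capacity_mbps)
        frac_b = fraction_of_fair_share(tput_b, capacity_mbps)
        own.setdefault(a, []).append(frac_a)
        own.setdefault(b, []).append(frac_b)
        caused.setdefault(a, []).append(frac_b)
        caused.setdefault(b, []).append(frac_a)
    sensitivity = {s: mean_shortfall(f) for s, f in own.items()}
    contentiousness = {s: mean_shortfall(f) for s, f in caused.items()}
    return sensitivity, contentiousness


# Made-up pairwise results on a 100 Mbps bottleneck.
trials = {("A", "B"): (70, 30), ("A", "C"): (50, 50), ("B", "C"): (20, 80)}
sensitivity, contentiousness = score_services(trials, capacity_mbps=100)
print(sensitivity, contentiousness)
```

With these made-up numbers, B is the most sensitive service and C the most contentious. A real platform would presumably also average over repeated runs and several network conditions.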

Evaluation

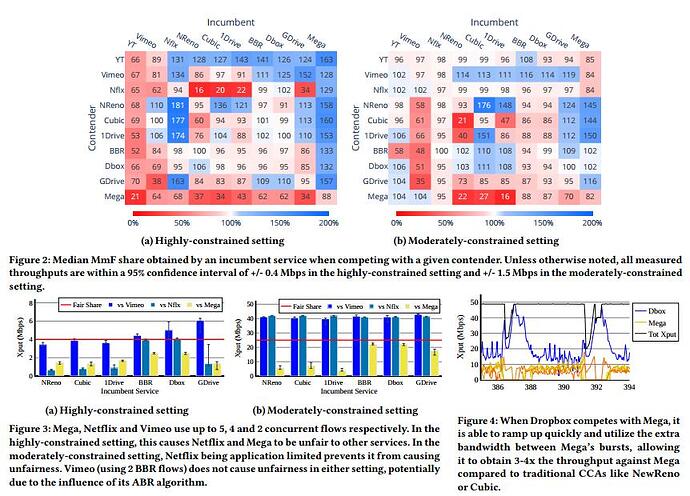

The evaluation of the Prudentia platform showed that services typically experience unfair bandwidth allocations. Some applications, deemed "losers", obtain significantly less than their fair share of network resources. These results matter because they provide empirical evidence that network fairness cannot be accurately assessed by examining individual application components alone. A comprehensive approach that considers entire service behaviors under real-world conditions is needed. For network operators and policymakers, this underlines the need for broader assessments to ensure equitable service delivery across the Internet.
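Calling a service a "loser" first requires a definition of fair share. The simplest definition is an equal split. When a service cannot use all of its split, as with a video player limited by its bitrate ladder, max-min fairness is a common alternative. The sketch below computes max-min shares on a single bottleneck by water-filling, then flags services that receive less than a threshold fraction of their share. The 0.8 threshold, the function names, and the example demands are assumptions for illustration, not values from the paper.

```python
import math


def max_min_fair_shares(capacity, demands):
    """Max-min fair allocation on a single bottleneck via water-filling.

    demands maps service -> maximum rate it can use (math.inf for bulk transfers).
    Services whose demand fits within an equal split get exactly their demand;
    the leftover capacity is split evenly among the rest.
    """
    shares = {}
    remaining = float(capacity)
    pending = sorted(demands, key=demands.get)  # smallest demand first
    while pending:
        equal_split = remaining / len(pending)
        smallest = pending[0]
        if demands[smallest] <= equal_split:
            # Fully satisfy this service and redistribute what it leaves behind.
            shares[smallest] = float(demands[smallest])
            remaining -= demands[smallest]
            pending.pop(0)
        else:
            # Every remaining service wants more than an equal split.
            for name in pending:
                shares[name] = equal_split
            break
    return shares


def find_losers(capacity, demands, measured, threshold=0.8):
    """Services whose measured throughput is below threshold x their fair share."""
    fair = max_min_fair_shares(capacity, demands)
    return sorted(name for name in demands if measured[name] < threshold * fair[name])


demands = {"video": 12.0, "bulk": math.inf}  # Mbps; hypothetical values
print(max_min_fair_shares(100, demands))      # {'video': 12.0, 'bulk': 88.0}
print(find_losers(100, demands, {"video": 12.0, "bulk": 60.0}))  # ['bulk']
```

Under max-min fairness, the video service's fair share is just its demand. A bulk transfer stuck at 60 Mbps is therefore the loser here, even though it receives far more than the video service.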

Q1: Does this study assume that applications always do the right thing, or ignore that they might do the wrong thing?

A1: Yes, that’s an excellent point. Often, we design perfect CCAs, but if applications use them incorrectly, you see strange outcomes. Errors can happen in many ways. For example, if YouTube suffers from loss and experiences unfairness compared to competing services, is it the fault of the competitors or is YouTube too sensitive? Therefore, we should test services because users experience the Internet through services, not CCAs.

Q2: In those cases, did you measure quality of experience (QoE), or only throughput? Could it be that an application did not need its full fair share, and its QoE was already satisfied with less bandwidth?

A2: Yes, we considered that. For video services, underutilization and unfairness are common because of bitrate ladders. But in the case of NewReno and Cubic, which got less than their fair share when competing with Mega, the flows were running a bulk-transfer workload that could fully utilize the link, so limited demand does not explain the shortfall. Mega downloads in batches. This leaves periods of spare capacity between batches, and NewReno and Cubic cannot ramp up quickly enough to use it.
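The bitrate-ladder effect can be illustrated with a toy adaptive-bitrate rule: the player picks the highest rung that fits in the bandwidth available to it. The ladder values and the selection rule below are hypothetical, and real players use more elaborate ABR algorithms. Whenever the top rung is below the fair share, the video service leaves the difference unused. This is why raw throughput alone can overstate unfairness for video.

```python
def pick_rung(ladder_mbps, available_mbps):
    """Hypothetical ABR rule: the highest rung that fits in the available
    bandwidth, falling back to the lowest rung if none fits."""
    fitting = [rung for rung in ladder_mbps if rung <= available_mbps]
    return max(fitting) if fitting else min(ladder_mbps)


LADDER_MBPS = [1.5, 3.0, 6.0, 12.0]  # hypothetical bitrate ladder
fair_share = 100.0 / 2               # two services on a 100 Mbps link

rung = pick_rung(LADDER_MBPS, fair_share)
print(rung, fair_share - rung)  # 12.0 38.0
```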

Q3: So this is a congestion control bug, not an unfairness issue?

A3: It’s an application-level congestion control bug. Mega’s multiple flows, if continuously running, wouldn’t have this issue. The problem is Mega’s batch downloading and pauses between batches.
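The cost of Mega's pauses can be put in rough numbers. The sketch below is a toy model with made-up parameters, not a reproduction of Prudentia's experiments. During a pause, the batching service sends nothing. A single competing flow starts from a backed-off rate and grows linearly, roughly like NewReno's congestion avoidance; Cubic's window growth follows a different curve. The code counts how much link capacity stays idle before the competitor catches up. The function name and all parameters are hypothetical.

```python
def idle_capacity_during_pause(capacity, start_rate, increase_per_tick, pause_ticks):
    """Capacity left unused while one additive-increase flow ramps up alone.

    Toy model (an assumption, not Prudentia's measurement): the batching
    service sends nothing during its pause, and the competing flow starts the
    pause at start_rate (e.g. just after backing off during the previous batch),
    then adds increase_per_tick per tick, never using more than capacity.
    """
    idle = 0.0
    rate = start_rate
    for _ in range(pause_ticks):
        idle += capacity - min(rate, capacity)  # unused capacity this tick
        rate += increase_per_tick               # linear ramp-up
    return idle


# Hypothetical numbers: 100-unit link, competitor backed off to 25, +5 per tick.
for pause in (10, 20):
    idle = idle_capacity_during_pause(100, 25, 5, pause)
    print(pause, idle, idle / (100 * pause))
```

With these numbers, about half the link sits idle during a 10-tick pause (525 of 1,000 capacity-ticks), and 30% sits idle during a 20-tick pause. Shorter, more frequent pauses waste a larger fraction of each pause.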

Personal Thoughts

The findings from “Prudentia: Findings of an Internet Fairness Watchdog” are intriguing and highlight an often-overlooked aspect of network management: the fairness of service-level outcomes in shared networks. The thorough approach to measuring how different network conditions affect various types of Internet services is particularly commendable. However, the study raises several questions worth exploring. One is how well the Prudentia platform scales to more diverse network environments. Another is how it will adapt to future network technologies. Additionally, the platform provides insightful data on how services interact under contention, but Internet traffic is dynamic and application behavior keeps evolving, so such platforms will need continuous development to keep pace. Automated, adaptive mechanisms that adjust resource allocation in real time based on fairness metrics could be a valuable extension of this line of work.