Research Infrastructures and Tools for Reproducible Data Communication Research

Title: Research Infrastructures and Tools for Reproducible Data Communication Research

Host: Georg Carle

Panelists: Georg Carle, Serge Fdida (Sorbonne University), Marie-José Montpetit (SLICES-RI), Sebastian Gallenmüller (TUM), Ethan Katz-Bassett (Columbia University)

Scribe: Hongyu Du (Xiamen University)

Introduction

This session examined the future of reproducibility in data communication research. The panelists noted that research in the field has long struggled with experimental continuity, reuse of results, and credibility, and that the growing dominance of large technology companies has put the academic community at a further disadvantage. They emphasized the strategic value of large-scale research infrastructures (such as SLICES) and newer platforms (such as the Peering Testbed) in providing shared resources, supporting cross-border collaboration, and enabling realistic yet controllable experiments. They identified improving Artifact Evaluation, closer integration of industry and academia, open access to data, and the use of artificial intelligence to automate experiments and make them more reproducible as key directions for addressing the reproducibility crisis and driving research innovation.

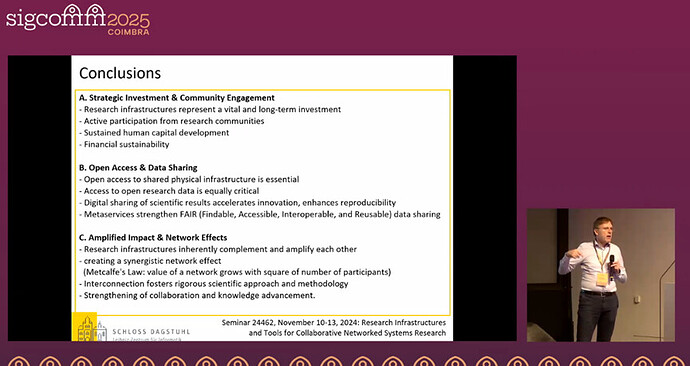

1: Report One

Georg Carle pointed out that traditional research suffers from poor continuity, difficulty in building on the work of others, and difficulty in combining and reusing results. Despite years of discussion in the community, these problems remain unresolved. He emphasized the crucial role of research infrastructure in providing a unified software framework and promoting high-quality reproducible research. Large-scale research infrastructure is an important strategic investment: it must secure sustainable funding for equipment and personnel, and it must also promote open sharing and the accumulation of results so that collaboration and network effects can emerge. Finally, he argued that building research infrastructure requires clear scientific goals if it is to create real added value and advance communication and computing research.

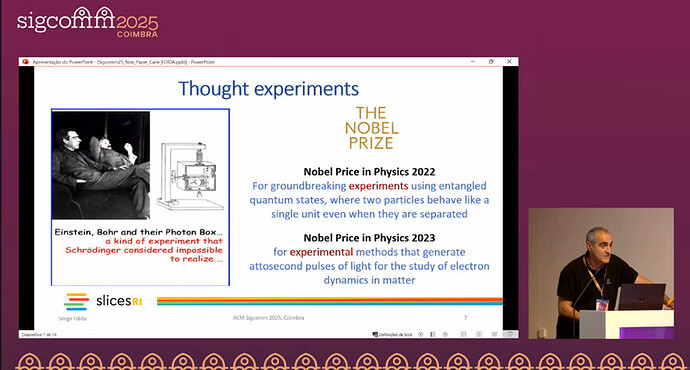

2: Report Two

In this report, Serge Fdida addressed four core issues in scientific research. First, reproducibility has not improved significantly, and the resulting doubts about the credibility of results are regarded as a major risk to the scientific community. Second, the influence of large technology companies on the academic community is growing: the proportion of conference papers from big companies has reached as high as 60%, which, together with the growing number of authors, raises questions about academic fairness and the allocation of research resources. Third, scientific instruments matter: over 90% of papers rely on them for experiments and data collection, and as research infrastructure they support the entire research life cycle, ensuring quality and reproducibility. Finally, artificial intelligence is playing a transformative role: AI is profoundly changing science and society, and its development depends on open access to data. Overall, Serge Fdida urged the research community to confront the reproducibility crisis, resource inequality, and data openness in order to keep research fair and sustainable.

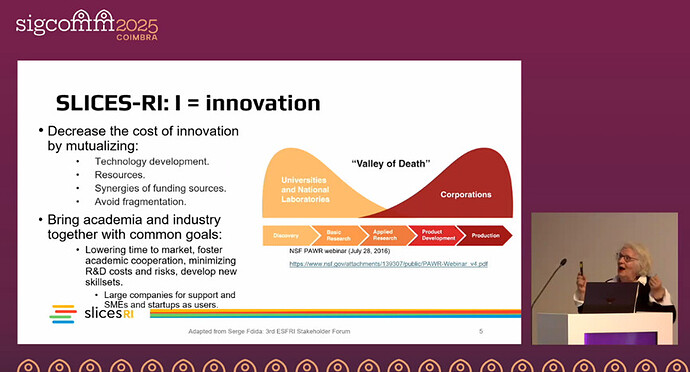

3: Report Three

This report highlighted the long-standing gap between academia and industry: researchers and industry practitioners work independently, with little communication or cooperation. Marie-José Montpetit pointed out that research infrastructure platforms such as SLICES can bridge the two. SLICES is project-driven and led by the research community; it is distributed and shared across 16 countries, uses virtualization to improve accessibility, compatibility, and scalability, and is planned to operate until 2042 so that it can keep pace with a rapidly evolving technological environment. The speaker emphasized that the future focus lies in advanced infrastructure, digital research tools, and the participation of user communities, and proposed two key directions: first, blueprints, which are used to automate experiments and simplify research workflows; second, data and datasets, described as the core fuel of the 21st century. The speaker also emphasized the importance of reproducibility and reiterated that SLICES-RI aims to connect industry and academia and to promote research and innovation.

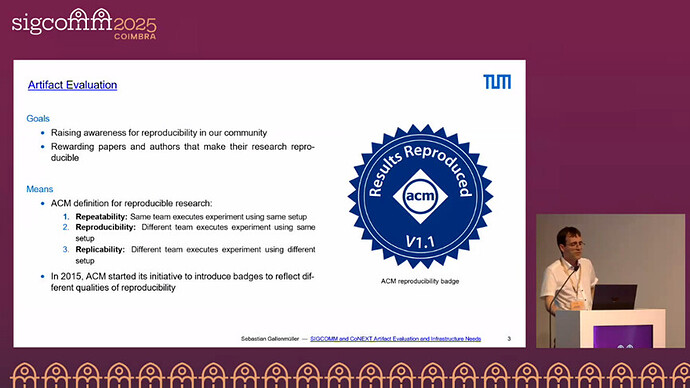

4: Report Four

This report focused on the reproducibility of experiments and the Artifact Evaluation process. Sebastian Gallenmüller reviewed developments since conferences such as SIGCOMM introduced reproducibility certification in 2015 and found that the proportion of papers submitting usable, functional, or reproducible artifacts stagnated between 2020 and 2024. The reason is that replicating experiments often requires expensive and hard-to-obtain hardware (such as high-priced GPUs, special switches, CPUs that support SGX, large amounts of memory, and even costly cloud instances), which places a heavy burden on both authors and reviewers. Participants nevertheless generally regarded Artifact Evaluation as valuable, while acknowledging that it is time-consuming and laborious. To improve the process, Sebastian Gallenmüller made several suggestions: adopt the pre-approval mechanism of ACM IMC and screen submissions before formal reproduction; rely on shared testbeds to provide diverse hardware and reduce resource bottlenecks; have authors and reviewers use the same platform to make debugging easier; and support the process with the reproducibility frameworks of infrastructures such as SLICES. This year, SIGCOMM began using the CloudLab testbed for artifact review, which is regarded as a step forward for reproducibility.

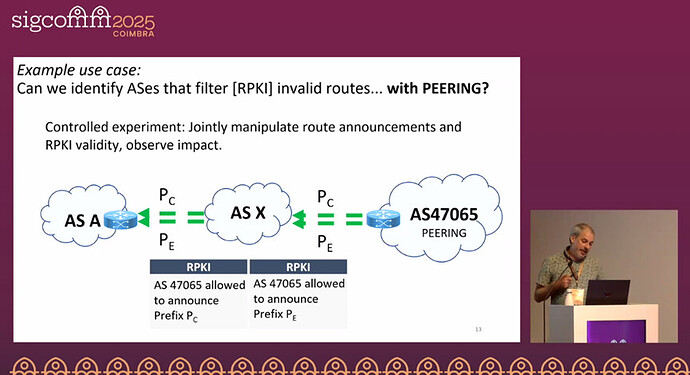

5: Report Five

This report introduced the construction and value of the Peering Testbed (a peering and interconnection testing platform). The platform originated in doctoral dissertation research and has since been expanded and opened to the research community. The Peering Testbed has deployed routers at universities worldwide, IXPs, cloud data centers, and Cloudflare nodes, giving researchers almost full control over the interdomain control plane and data plane of an autonomous system. Researchers can make BGP route announcements, select which neighbors' paths to use, and exchange traffic with Internet destinations. The platform matters because traditional BGP research tools are limited, which makes it difficult to faithfully reproduce security, performance, and availability problems. For instance, research on route hijacking defenses previously had to rely on passive observation, which made it difficult to rule out confounding factors such as load balancing. With the Peering Testbed, researchers can actively control whether routes and announcements are legitimate, and can therefore run more precise and controlled experiments and support in-depth verification and improvement of BGP security mechanisms.
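To make the idea of a "legitimate" versus an illegitimate announcement concrete, the following minimal sketch classifies a BGP announcement by route origin validation, in the style of RFC 6811. It is an illustration only and was not presented in the session: the `Roa` class, the `validate_origin` function, the documentation prefixes, and the private-use AS numbers are all hypothetical, and the code does not use or represent any Peering Testbed API.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class Roa:
    """Hypothetical record: which AS may originate a prefix, and how specific."""
    prefix: str
    max_length: int
    origin_asn: int


def validate_origin(announced_prefix: str, origin_asn: int, roas: list) -> str:
    """Classify an announcement as 'valid', 'invalid', or 'not-found'."""
    announced = ip_network(announced_prefix)
    covered = False
    for roa in roas:
        roa_net = ip_network(roa.prefix)
        # subnet_of() raises TypeError on mixed IPv4/IPv6, so filter first.
        if announced.version != roa_net.version:
            continue
        if not announced.subnet_of(roa_net):
            continue
        covered = True  # at least one record covers the announced prefix
        if roa.origin_asn == origin_asn and announced.prefixlen <= roa.max_length:
            return "valid"
    # Covered but no matching record: wrong origin or too-specific prefix.
    return "invalid" if covered else "not-found"


# Illustrative data only: a documentation prefix and private-use AS numbers.
ROAS = [Roa(prefix="192.0.2.0/24", max_length=24, origin_asn=64500)]

if __name__ == "__main__":
    print(validate_origin("192.0.2.0/24", 64500, ROAS))     # legitimate origin
    print(validate_origin("192.0.2.0/24", 64501, ROAS))     # different origin AS
    print(validate_origin("192.0.2.0/25", 64500, ROAS))     # more specific than allowed
    print(validate_origin("198.51.100.0/24", 64500, ROAS))  # no covering record
```

In a controlled experiment, a researcher would decide in advance which announcements are legitimate and which simulate a hijack, and could then compare a defense mechanism's verdicts against that ground truth, something passive observation cannot provide.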

Discussion

The first topic was that research funding agencies in the United States and Europe increasingly emphasize reproducibility and are linking data openness to future funding in order to standardize research practice. Participants believed that tying reproducibility to funding success could be an effective lever for changing the current state of research. It is also necessary to consider what incentives would motivate students and young researchers to maintain long-running experiments and accumulate data.

The second topic was that many testbeds have high entry barriers for new users, especially interdisciplinary researchers (for example, from VR/AR, video, and sustainable development), because of steep learning curves and complex configuration. The discussion focused on how to lower these barriers, including providing automated tools, simplifying experiment configuration, and adding example scripts and permission control mechanisms, so that students and interdisciplinary researchers can start running experiments more quickly.

The third topic was that the scale and complexity of current research problems keep growing. A single academic institution can rarely reproduce or solve them alone, so large-scale research infrastructure is needed to approximate industrial scale. Such infrastructure not only provides experimental capabilities but also allows the robustness and effectiveness of a solution to be verified in real-world environments, which makes academic research more credible and more applicable in practice.

Personal thoughts

In my opinion, this discussion revealed several deep tensions and challenges in the research ecosystem. On the one hand, the scale and complexity of research problems keep increasing, making it difficult for a single academic institution to carry out high-quality, reproducible experiments on its own, which highlights the need for large-scale research infrastructure and cross-institutional collaboration. On the other hand, the practical pressures of students' career development and of industrial cooperation often leave research shaped by external resources and employment prospects, which may affect academic independence and the sustainability of long-term experiments. In response, I believe the future research ecosystem needs balance in two respects. First, research infrastructure must be more open, more user-friendly, and better automated, so that the academic community, especially young researchers, can quickly enter an experimental environment and conduct reproducible research. Second, funding agencies and academic communities should design sound incentive mechanisms that incorporate reproducibility, data sharing, and interdisciplinary collaboration into evaluation criteria, so as to preserve academic freedom while improving research quality and innovation capacity. This balance would help narrow the resource gap between academia and industry, and would also allow research results to be verified at larger scale and in more realistic environments, thereby enhancing the credibility and influence of the entire academic community.