Title: Scalable Video Conferencing Using SDN Principles

Authors:

Oliver Michel (Princeton University)

Satadal Sengupta (Princeton University)

Hyojoon Kim (University of Virginia)

Ravi Netravali (Princeton University)

Jennifer Rexford (Princeton University)

Scribe: Mingjun Fang (Xiamen University)

Introduction

The introduction highlights the central role of video conferencing applications (e.g., Zoom and Google Meet) in modern communication, and the central role of Selective Forwarding Units (SFUs) in their architecture. An SFU must do more than relay media streams among participants. It must also adapt those streams to time-varying network conditions. Software SFUs, however, suffer from OS-level overheads such as scheduling, context switches, and buffer copying. These overheads make it hard for them to cope with load that grows quadratically with meeting size and to meet tight latency requirements on the media path. The authors analyzed Zoom traces collected on a real campus network and observed that most SFU operations closely resemble traditional packet-processing tasks such as dropping and forwarding. This observation provides the foundation for offloading those operations to hardware.
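The "quadratic load growth" can be made concrete with a little arithmetic. Suppose each of N participants sends one stream and the SFU forwards it to every other participant. The SFU then emits N(N-1) streams per meeting. The sketch below assumes exactly one stream per sender, no simulcast layers, and no receive-only participants. These simplifications are ours, not the paper's.

```python
def forwarded_streams(participants: int) -> int:
    """Outgoing streams an SFU emits for one meeting.

    Simplifying assumption: every participant sends exactly one stream and
    the SFU relays it to all other participants (no simulcast, no
    receive-only users), giving N * (N - 1) streams.
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    return participants * max(participants - 1, 0)


if __name__ == "__main__":
    for n in (2, 5, 10, 50):
        print(f"{n:>3} participants -> {forwarded_streams(n):>5} forwarded streams")
```

Going from 10 to 50 participants multiplies the participant count by 5. Under these assumptions, it multiplies the SFU's outgoing streams by about 27 (from 90 to 2,450).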

Key idea and contribution

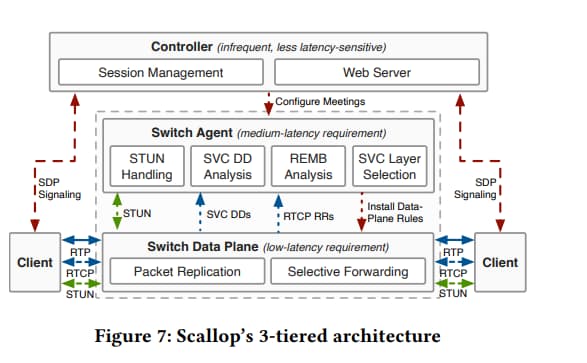

The design section lays out Scallop's architecture. The authors analyzed production SFUs and real traffic traces and found that media forwarding and adaptation closely resemble traditional packet-processing tasks. This finding motivates a hardware/software co-design that splits the SFU into an efficient data plane and a two-tier software control plane. The split raises three challenges:

- preserving application semantics while separating functions;
- implementing media replication and forwarding in hardware;
- remaining compatible with the WebRTC standards.

The authors address these challenges with a three-tier architecture, platform-optimized implementations, and transparent proxy mechanisms.

Scallop assigns SFU functions to the three tiers according to how often they run and how latency-sensitive they are:

- A centralized controller handles infrequent, non-real-time tasks such as session management.
- A switch agent running on the hardware device handles medium-frequency tasks such as bandwidth estimation and feedback processing, forming a low-latency control loop.
- The hardware data plane takes over the most frequent and latency-sensitive operations (media packet replication, forwarding, and dropping) entirely. This offload is the key to Scallop's performance and scalability.
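The data-plane tier replicates each media packet to the other participants in a meeting and drops copies that a receiver should not get. Its per-packet job can be sketched in software as shown below. This is a minimal illustration built on hypothetical names (`MediaPacket`, `replicate`, and a per-receiver `max_layer` table standing in for rate-adaptation state). It is not Scallop's hardware implementation. On a switch, this work would be done by match-action tables and the packet replication engine rather than by a loop.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MediaPacket:
    sender: str  # participant that produced the stream
    layer: int   # hypothetical: 0 = base layer, higher = enhancement layers
    seq: int     # RTP sequence number (left unchanged in this sketch)


def replicate(pkt: MediaPacket, max_layer: dict[str, int]) -> list[tuple[str, MediaPacket]]:
    """Return one (receiver, packet) copy per participant that should get pkt.

    max_layer is a hypothetical per-meeting table mapping each participant to
    the highest layer the control plane currently lets it receive.
    """
    copies: list[tuple[str, MediaPacket]] = []
    for receiver, limit in max_layer.items():
        if receiver == pkt.sender:
            continue  # never echo media back to its sender
        if pkt.layer > limit:
            continue  # drop: this receiver is not allowed this layer right now
        copies.append((receiver, pkt))  # forward an identical copy
    return copies


if __name__ == "__main__":
    meeting = {"alice": 2, "bob": 0, "carol": 1}
    print(replicate(MediaPacket("alice", layer=1, seq=7), meeting))
```

In the example, Bob's limit is layer 0, so the layer-1 packet is dropped for him and only Carol receives a copy. In Scallop's split, adjusting limits like these would presumably fall to the software tiers (e.g., the switch agent's feedback loop). The per-packet loop corresponds to the work the hardware performs.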

Evaluation

The evaluation tests Scallop's performance through a series of experiments. The deployment is split across three places:

- the data plane runs on a 12.8 Tbit/s Intel Tofino2 switch;
- the switch agent runs on the switch's CPU;
- the controller runs on a separate server.

The control-plane analysis shows that the data plane processes 96.46% of packets and 99.65% of bytes, so only 3.54% of packets require software processing. Latency tests show a 26.8x reduction in median latency and an 8.5x reduction in 99th-percentile latency. Rate-adaptation experiments confirm that quality is maintained even under packet loss. Scalability simulations show support for up to 128,000 concurrent meetings, a 7x to 422x improvement over software solutions.
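The two data-plane percentages also reveal something about the traffic that software still handles. The back-of-the-envelope calculation below uses only the figures quoted above. Software handles 3.54% of packets but only 0.35% of bytes, so a software-handled packet is on average roughly a tenth the size of a data-plane packet. This fits the picture of software processing mostly small control or feedback packets rather than media, but that reading is our inference, not a result reported in the paper.

```python
# Shares of traffic handled in the Tofino2 data plane, as reported in the evaluation.
DATA_PLANE_PACKET_PCT = 96.46
DATA_PLANE_BYTE_PCT = 99.65

# Whatever the data plane does not handle goes to software (switch agent or controller).
software_packet_pct = 100.0 - DATA_PLANE_PACKET_PCT
software_byte_pct = 100.0 - DATA_PLANE_BYTE_PCT

# Mean size of a software-handled packet relative to a data-plane packet:
# (byte share / packet share) for software divided by the same ratio for hardware.
relative_size = (software_byte_pct / software_packet_pct) / (
    DATA_PLANE_BYTE_PCT / DATA_PLANE_PACKET_PCT
)

print(f"software: {software_packet_pct:.2f}% of packets, {software_byte_pct:.2f}% of bytes")
print(f"average software packet is {relative_size:.3f}x the size of an average data-plane packet")
```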

Q&A

Q1:

Well, I'm a bit confused by the motivation, because SDN is a network-level technology and an SFU is an application-level construct, right? So why do you need to turn to SDN and implement this in programmable switches? I'm not really buying the motivation. Why would you do that? … Another way to ask the same question: application providers like Zoom don't own network infrastructure, right? They attach their servers to existing networks. So those application-layer operators don't really have network infrastructure with programmable switches on which to implement this functionality, right?

A1:

That's a great question. Zoom uses cloud services to get its work done, and our idea is essentially that the cloud itself already contains switches, right? So if cloud providers adopt a model like this for video conferencing traffic, application operators like Zoom can leverage it and still use the cloud services. The work would shift from software running in the cloud to the data center switches that are already present there.

Q2:

Thank you for a very nice talk. If you offload this to the BlueField, the BlueField can do this minimal function of replicating and maybe dropping packets. Did you think about co-designing the data plane with the host, so that the host could do more elaborate functions like transcoding to different video codecs, or maybe layer adaptation, or things of that kind?

A2:

Yeah, that's a great question, thank you. There are two thoughts here. First, we have been in touch with NVIDIA, and one of the things we found is that they are in the process of enabling bulk application. At the time of writing this paper, that was not available, and it was not clear whether it would come. So maybe there's an opportunity there, directly in the SmartNIC hardware. Second, as you said, we could offload the control plane to the host and do the rest of the work on the Arm cores in the NVIDIA, sorry, in the SmartNIC… We should have numbers on that sometime soon, but we don't have them yet.

Personal thoughts

The Scallop paper presents an innovative redesign of video conferencing systems based on SDN principles, offloading high-frequency, low-latency media packet processing to programmable hardware while retaining control functions like session management and rate adaptation in software. This design is not only conceptually clear but also validated across multiple platforms, demonstrating significant performance gains. However, its strong reliance on specific hardware capabilities (e.g., Tofino’s packet replication engine and BlueField-3’s reparse feature) may limit portability and deployment in heterogeneous environments. Additionally, end-to-end encryption is not fully addressed, and the proposed key management approaches require further practical evaluation. The sequence number rewriting heuristic, though efficient in hardware, warrants more testing under extreme network conditions such as high packet loss or reordering. Overall, Scallop outlines a promising architecture for hardware-accelerated video conferencing, though issues around cross-platform compatibility, security, and robustness under challenging network conditions remain to be further explored.
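Some background on why an SFU rewrites sequence numbers at all helps here. When an SFU deliberately drops packets for a receiver (for example, an enhancement layer), the receiver would otherwise see gaps in the RTP sequence space and treat them as loss. The sketch below shows a generic, per-receiver offset approach in software: count the intentionally dropped packets and subtract that count modulo 2^16. It is not Scallop's hardware heuristic, and the names `SeqRewriter` and `rewrite_stream` are hypothetical. It also illustrates the robustness concern raised above. If a dropped packet arrives after a later packet that was already forwarded, the offsets go wrong and the receiver sees a duplicate sequence number.

```python
SEQ_MOD = 1 << 16  # RTP sequence numbers are 16-bit and wrap around


class SeqRewriter:
    """Per-receiver RTP sequence-number rewriting (generic offset sketch).

    Assumes in-order arrival: each intentionally dropped packet shifts every
    later forwarded packet down by one, so the receiver sees no gaps.
    """

    def __init__(self) -> None:
        self.dropped = 0  # packets intentionally dropped for this receiver so far

    def drop(self) -> None:
        self.dropped += 1

    def forward(self, seq: int) -> int:
        # Shift by the number of drops, wrapping at 2^16.
        return (seq - self.dropped) % SEQ_MOD


def rewrite_stream(events):
    """events: iterable of (seq, keep) in arrival order; returns rewritten seqs."""
    rewriter = SeqRewriter()
    out = []
    for seq, keep in events:
        if keep:
            out.append(rewriter.forward(seq))
        else:
            rewriter.drop()
    return out


if __name__ == "__main__":
    # In order, across the 16-bit wraparound: output stays gap-free.
    print(rewrite_stream([(65534, True), (65535, False), (0, True), (1, True)]))
    # Reordered: dropped packet 11 arrives after 12, so 13 is mapped onto 12 again.
    print(rewrite_stream([(10, True), (12, True), (11, False), (13, True)]))
```

The first run yields 65534, 65535, 0, a contiguous sequence across the wraparound. The second yields 10, 12, 12, which is the kind of failure that high reordering could trigger in any offset-based scheme.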