Title: Triton: A Flexible Hardware Offloading Architecture for Accelerating Apsara vSwitch in Alibaba Cloud

Authors:

Xing Li (Zhejiang University and Alibaba Cloud); Xiaochong Jiang (Zhejiang University); Ye Yang (Alibaba Cloud); Lilong Chen (Zhejiang University); Yi Wang, Chao Wang, Chao Xu, Yilong Lv, Bowen Yang, Taotao Wu, Haifeng Gao, Zikang Chen, Yisong Qiao, Hongwei Ding, Yijian Dong, Hang Yang, Jianming Song, Jianyuan Lu, Pengyu Zhang (Alibaba Cloud); Chengkun Wei, Zihui Zhang, Wenzhi Chen, Qinming He (Zhejiang University); Shunmin Zhu (Tsinghua University and Alibaba Cloud)

Scribe: Yao Wang (Xiamen University)

Introduction

The paper addresses the challenge of accelerating the Apsara vSwitch (AVS) in Alibaba Cloud to meet increasing performance demands. AVS is a critical component that provides connectivity for instances such as VMs and containers. Existing solutions, such as the “Sep-path” offloading architecture, fall short because of unpredictable performance and low iteration velocity, which motivates a more efficient and flexible system.

Key idea and contribution

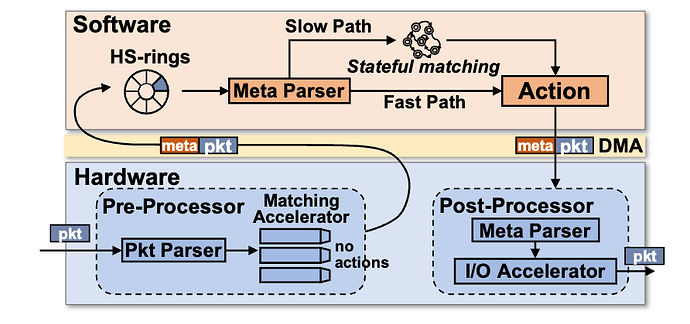

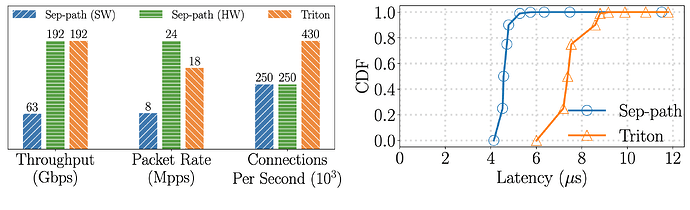

The authors propose Triton, a flexible hardware offloading architecture designed to enhance AVS performance. Unlike the “Sep-path” architecture, Triton employs a unified data path in which every packet is processed serially through both software and hardware, ensuring predictable performance. Triton's workload distribution model offloads generic tasks to hardware while keeping dynamic logic in software. It also integrates software-hardware co-designs, such as vector packet processing and header-payload slicing, to alleviate software bottlenecks and improve forwarding efficiency. The deployment of Triton in Alibaba Cloud demonstrates significant improvements in bandwidth, packet rate, and connection establishment rate.
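To make the unified data path more concrete, here is a minimal Python sketch of a serial hardware, software, hardware pipeline. It is only an illustration under stated assumptions, not the authors' implementation: the toy packet format (a 4-byte header whose first two bytes are the flow key), the function names `hw_pre_process`, `sw_process`, `hw_post_process`, and the dictionary used as a payload buffer are all hypothetical. It assumes that header-payload slicing means only headers reach software while payloads stay parked in hardware, and that vector packet processing means one flow-table lookup per batch of same-flow packets. The grouping is done in the software stage here purely for simplicity.

```python
from collections import defaultdict
from dataclasses import dataclass

HEADER_LEN = 4  # hypothetical fixed header size for this toy packet format


@dataclass
class SlicedPacket:
    pkt_id: int    # handle used to find the parked payload again
    flow: bytes    # flow key (first two header bytes in this toy format)
    header: bytes  # the only part of the packet that software sees


def hw_pre_process(packets, payload_store):
    """Simulated hardware stage: slice each packet and park its payload."""
    sliced = []
    for pkt_id, raw in enumerate(packets):
        header, payload = raw[:HEADER_LEN], raw[HEADER_LEN:]
        payload_store[pkt_id] = payload
        sliced.append(SlicedPacket(pkt_id, header[:2], header))
    return sliced


def sw_process(sliced, flow_table):
    """Simulated software stage: group headers by flow, one lookup per vector."""
    vectors = defaultdict(list)
    for pkt in sliced:
        vectors[pkt.flow].append(pkt)

    decisions = []
    for flow, vector in vectors.items():
        action = flow_table.get(flow)  # single lookup for the whole vector
        if action is None:
            continue  # no matching rule: drop every packet in this vector
        for pkt in vector:
            # Toy action: rewrite the two flow-key bytes of the header.
            decisions.append((pkt.pkt_id, action + pkt.header[2:]))
    return decisions


def hw_post_process(decisions, payload_store):
    """Simulated hardware stage: reattach parked payloads to new headers."""
    return [header + payload_store.pop(pkt_id) for pkt_id, header in decisions]


def forward(packets, flow_table):
    """Every packet takes the same serial path: hardware, software, hardware."""
    payload_store = {}
    sliced = hw_pre_process(packets, payload_store)
    decisions = sw_process(sliced, flow_table)
    return hw_post_process(decisions, payload_store)


if __name__ == "__main__":
    table = {b"AA": b"XX", b"BB": b"YY"}
    pkts = [b"AA01hello", b"BB02world", b"AA03again", b"CC04drop"]
    print(forward(pkts, table))
```

In this sketch no packet bypasses software, which is the property that distinguishes a unified path from a split fast/slow path; the per-packet software cost is kept low by handing over only headers and by amortising the table lookup across a vector.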

Evaluation

The evaluation shows that Triton achieves predictably high bandwidth and packet rate and improves the connection establishment rate by 72%, with only a 2μs increase in latency. This matters because it shows that Triton not only matches but surpasses the performance of previous solutions, while also offering predictable performance and faster iteration velocity. The real-world deployment results suggest that Triton can enhance both the performance and the flexibility of cloud infrastructure, making it a valuable solution for cloud vendors.

Q:

Where are the crypto operations in Triton?

A:

They should be placed in the pre-processor or post-processor. Services should be logic-agnostic, meaning that changes to services should not change the logic of the software layer.

Personal thoughts

I find Triton’s approach to unifying the software and hardware data paths particularly innovative. The performance-oriented designs, such as HS-rings and vector packet processing, are especially eye-catching. However, the paper could have delved deeper into the potential trade-offs of this unified approach, such as the complexity of managing a single pipeline. It would also be interesting to explore how Triton handles workload patterns that vary over time and how well it adapts to future network demands.