Title: Zoom2Net: Constrained Network Telemetry Imputation

Authors: Fengchen Gong, Divya Raghunathan, Aarti Gupta, Maria Apostolaki (Princeton University)

Scribe: Xing Fang (Xiamen University)

Introduction

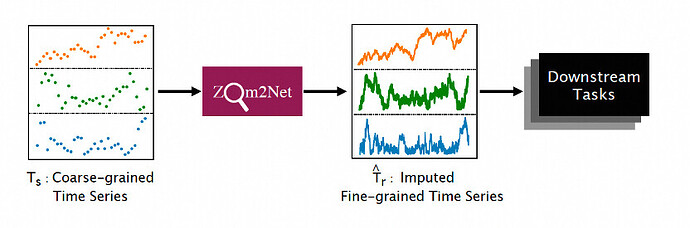

Zoom2Net addresses a key challenge in network monitoring: it combines formal methods and machine learning to refine coarse-grained telemetry data into fine-grained insights. Existing technologies, such as traffic mirroring and eBPF, often fall short in the data granularity they can practically provide, leaving network administrators with imprecise information for debugging, optimization, and fault localization. By reconstructing detailed network metrics from coarse measurements, Zoom2Net aims to improve the accuracy of network analysis.
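
To make the granularity gap concrete, here is a minimal Python sketch, assuming a hypothetical queue-length trace with one value per millisecond that is polled only once every 10 ms. The trace values are made up for illustration. A short microburst that dominates the fine-grained trace is entirely invisible in the coarse samples.

```python
# Hypothetical queue-length trace (packets), one value per 1 ms time step.
# The numbers are made up for illustration; they are not from the paper.
fine = [0, 0, 2, 40, 85, 60, 10, 0, 0, 0,
        0, 0, 0, 1, 0, 0, 0, 0, 0, 0]


def sample_every(series, interval):
    """Coarse-grained telemetry: keep one value every `interval` steps."""
    return series[::interval]


def peak(series):
    """Largest value observed in a series."""
    return max(series)


coarse = sample_every(fine, 10)  # e.g. one poll every 10 ms
print("fine-grained peak:", peak(fine))      # fine-grained peak: 85
print("coarse samples:", coarse)             # coarse samples: [0, 0]
print("coarse-grained peak:", peak(coarse))  # coarse-grained peak: 0
```

Any task that depends on the burst (for example, diagnosing a loss spike) has nothing to work with once only the coarse samples are kept.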

Key Idea and Contribution

The main challenges in reconstructing fine-grained data from coarse-grained data are threefold: a single coarse-grained time series can correspond to multiple fine-grained time series; even with multidimensional data, a coarse-grained input can still correspond to multiple fine-grained outcomes; and machine learning techniques cannot guarantee the logical correctness of their results, i.e., that the outputs are consistent with known network constraints.
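
The one-to-many problem can be shown with a small Python sketch. The coarse view used here is an assumption for illustration: for each interval it records a periodic sample (the first value) and a counter delta (the interval sum). Two clearly different fine-grained traces produce exactly the same coarse view, so no model can tell them apart from the coarse data alone.

```python
def coarse_view(fine, interval):
    """Hypothetical coarse telemetry: for each interval, the first value
    (a periodic sample) and the interval sum (a counter delta)."""
    view = []
    for start in range(0, len(fine), interval):
        window = fine[start:start + interval]
        view.append((window[0], sum(window)))
    return view


# Two different fine-grained traces (packets per ms) over two 5 ms intervals.
bursty = [2, 0, 18, 0, 0, 4, 4, 4, 4, 4]
smooth = [2, 4, 4, 4, 6, 4, 0, 16, 0, 0]

print(coarse_view(bursty, 5))                           # [(2, 20), (4, 20)]
print(coarse_view(bursty, 5) == coarse_view(smooth, 5))  # True
print(bursty == smooth)                                 # False
```

Adding more coarse dimensions narrows the set of candidates, but as the second challenge notes, it does not in general collapse it to a single trace.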

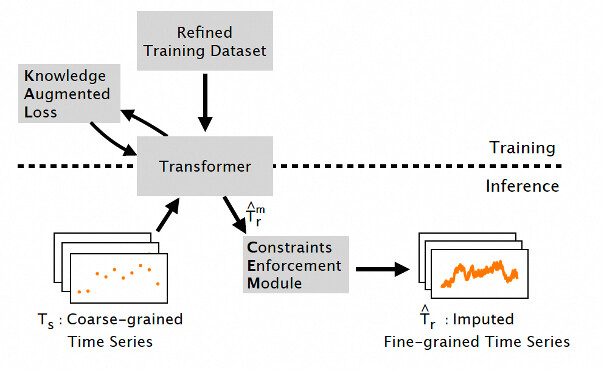

Zoom2Net integrates three main components to improve imputation accuracy: the Refinement Dataset, Knowledge Augmented Loss (KAL), and the Constraint Enforcement Module (CEM). The Refinement Dataset addresses the one-to-many mapping between coarse- and fine-grained data by optimizing the training dataset. KAL incorporates domain knowledge into the loss function so that the model's outputs align with network principles. CEM enforces network constraints on the final outputs; it models this step as an integer linear programming (ILP) problem and solves it with Gurobi. Together, these components improve the precision of fine-grained data reconstruction.
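
The KAL and CEM ideas can be illustrated with a minimal Python sketch. Everything here is an assumption for illustration, not the paper's formulation: a single coarse interval whose hypothetical constraints are that the first fine-grained value equals the periodic sample, the values sum to the coarse counter, and each value lies in [0, cap]. `kal_loss` adds a weighted squared-violation penalty to the MSE; the paper's actual constraint set and weighting may differ. `enforce` stands in for the CEM, but instead of solving an ILP with Gurobi it uses a greedy marginal-cost repair, which finds the integer vector closest in L1 distance to the prediction only for this narrow constraint shape (one sum constraint plus per-step bounds).

```python
import heapq


def violations(pred, sample, total, cap):
    """Hypothetical constraints for one coarse interval:
    pred[0] == sample, sum(pred) == total, 0 <= pred[i] <= cap."""
    v = [pred[0] - sample, sum(pred) - total]
    v += [max(0.0, -x) + max(0.0, x - cap) for x in pred]
    return v


def kal_loss(pred, truth, sample, total, cap, lam=1.0):
    """Knowledge-augmented loss sketch: MSE plus lam times the sum of
    squared constraint violations."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred)
    penalty = sum(x ** 2 for x in violations(pred, sample, total, cap))
    return mse + lam * penalty


def enforce(pred, sample, total, cap):
    """Constraint enforcement sketch: the integer vector closest to `pred`
    in L1 distance that satisfies the constraints above. Greedy repair by
    smallest marginal cost is exact for separable convex costs with one
    sum constraint and box bounds; general constraints need an ILP solver."""
    lo = [sample] + [0] * (len(pred) - 1)
    hi = [sample] + [cap] * (len(pred) - 1)
    if not sum(lo) <= total <= sum(hi):
        raise ValueError("constraints are infeasible")

    # Start from the per-step optimum: nearest integer, clamped to bounds.
    x = [min(max(round(p), l), h) for p, l, h in zip(pred, lo, hi)]
    step = 1 if sum(x) < total else -1

    def cost(i, v):
        return abs(v - pred[i])

    # Min-heap of (extra cost of moving x[i] one unit toward the target, i).
    heap = [(cost(i, x[i] + step) - cost(i, x[i]), i)
            for i in range(len(x)) if lo[i] <= x[i] + step <= hi[i]]
    heapq.heapify(heap)
    for _ in range(abs(total - sum(x))):
        _, i = heapq.heappop(heap)
        x[i] += step
        if lo[i] <= x[i] + step <= hi[i]:
            heapq.heappush(heap, (cost(i, x[i] + step) - cost(i, x[i]), i))
    return x


# Made-up model output for one 6-step interval with sample=2, total=20.
pred = [2.25, 6.75, 1.375, 0.125, 4.75, 3.25]
fixed = enforce(pred, sample=2, total=20, cap=10)
print(fixed)                                       # [2, 7, 2, 0, 5, 4]
print(all(v == 0 for v in violations(fixed, 2, 20, 10)))  # True
```

Sharing one `violations` function between the loss and the repair step mirrors the split described above: KAL only nudges the model toward the constraints during training, while the enforcement step guarantees them on the final output. Richer constraint sets (for example, constraints linking several metrics) would need a real ILP solver such as Gurobi.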

Evaluation

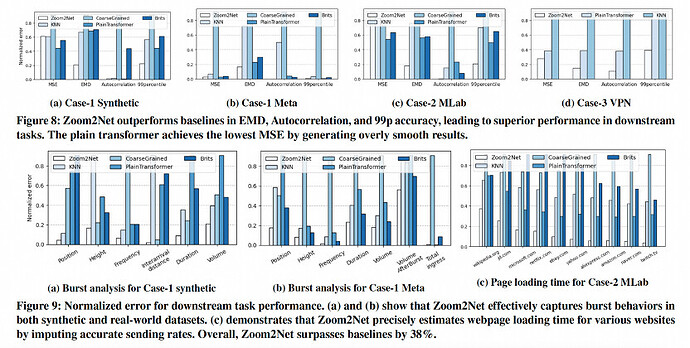

Zoom2Net’s effectiveness was demonstrated through comparisons with imputation techniques such as KNN, transformers, and BRITS, using both synthetic and real data. It achieved an average improvement of 38% in the accuracy of downstream tasks. This is significant because it shows that Zoom2Net can substantially improve the precision of network analysis, addressing a major gap in current monitoring systems. By blending formal methods with machine learning, Zoom2Net offers a promising path toward reliable network telemetry.

Q&A

Q1: How regular must the original data be for imputation to work with larger sampling intervals, and when does this break down?

A1: It depends on how often the data changes: rapidly changing data requires more frequent sampling. The paper includes a module that reports the model’s certainty in its imputed results, which helps determine whether the coarse data is sufficient.

Q2: How do you inform users about the confidence in the results, especially in case of ambiguity?

A2: We use an uncertainty model to indicate confidence levels. Additionally, we have a mechanism to identify collision instances, which are cases with similar inputs but different outputs. These instances should be a small subset of the training data. If there are too many, it indicates a problem.
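
The collision check described in A2 can be sketched in Python as follows. Both definitions are hypothetical choices for illustration, not the paper's: inputs count as "similar" when their coarse vectors fall in the same grid cell of width `bucket`, and outputs count as "different" when some time step differs by more than `tol`. The sketch returns the colliding samples so their share of the training set can be checked.

```python
from collections import defaultdict


def find_collisions(samples, bucket=1.0, tol=5.0):
    """Hypothetical collision check. `samples` is a list of
    (coarse_input, fine_output) pairs of numeric tuples; all fine
    outputs are assumed to have the same length."""
    groups = defaultdict(list)
    for coarse, fine in samples:
        # Similar inputs share a grid cell after quantization.
        key = tuple(round(v / bucket) for v in coarse)
        groups[key].append((coarse, fine))

    collisions = []
    for members in groups.values():
        outs = [fine for _, fine in members]
        # Largest per-time-step disagreement among outputs in this cell.
        spread = max(max(col) - min(col) for col in zip(*outs))
        if spread > tol:
            collisions.extend(members)
    return collisions


# Made-up training pairs: (sample, counter) -> 5-step fine-grained trace.
samples = [
    ((2, 20), (2, 0, 18, 0, 0)),
    ((2, 20), (2, 4, 4, 4, 6)),
    ((0, 5), (0, 1, 1, 2, 1)),
    ((0, 5), (0, 2, 1, 1, 1)),
]
collisions = find_collisions(samples)
print(len(collisions) / len(samples))  # 0.5
```

In this toy set half the samples collide, which by the reasoning in A2 would be a warning sign; on a real dataset the fraction should be small.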

Q3: Have you considered applying your methods to non-time series data or other abstractions in the future?

A3: Yes, we are considering whether it is possible to impute events from coarse measurements. This could be a direction for future work, beyond just imputing time series data.

Q4: How do you handle scenarios where not all signals can be collected? And how fine-grained can the data be?

A4: It is possible that some data cannot be collected, but this does not happen often.

Q5: Your evaluation seemed to focus on coarse-grained data. Shouldn’t it also check how accurately you reconstruct the fine-grained data?

A5: The goal is to show that the imputed fine-grained data performs better in management tasks than the original coarse data. We use an iterative imputer to expand the coarse data into fine-grained time series and compare these results with the actual fine-grained data.
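
The evaluation idea in A5, judging imputed data by how well a management task performs on it, can be sketched in Python. The task (per-interval burst detection), the threshold, the ground-truth trace, and the "imputed" series are all hypothetical; the imputed values are made up and are not results from the paper. Naive hold-last-value expansion of the coarse samples stands in for the coarse-data baseline, not for the iterative imputer mentioned above.

```python
def burst_flags(series, interval, threshold):
    """Hypothetical downstream task: flag each interval whose peak exceeds threshold."""
    return [max(series[s:s + interval]) > threshold
            for s in range(0, len(series), interval)]


def hold_last(samples, interval):
    """Naive coarse baseline: repeat each periodic sample across its interval."""
    return [v for v in samples for _ in range(interval)]


def accuracy(pred, truth):
    """Fraction of intervals where the predicted flag matches the ground truth."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


INTERVAL, THRESHOLD = 5, 10
truth = [2, 0, 18, 0, 0, 4, 4, 4, 4, 4, 1, 0, 0, 15, 0]
# Made-up output of some imputation model; not a result from the paper.
imputed = [2, 1, 12, 3, 2, 4, 4, 5, 4, 3, 1, 2, 9, 1, 2]
coarse = truth[::INTERVAL]

gt = burst_flags(truth, INTERVAL, THRESHOLD)
baseline = burst_flags(hold_last(coarse, INTERVAL), INTERVAL, THRESHOLD)
print(gt)                                                      # [True, False, True]
print(accuracy(baseline, gt))                                  # 0.3333333333333333
print(accuracy(burst_flags(imputed, INTERVAL, THRESHOLD), gt))  # 0.6666666666666666
```

Scoring on a task rather than on point-wise error rewards imputations that preserve the features operators care about (here, bursts), even if individual values are off.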

Personal Thoughts

The paper combines formal methods and domain knowledge to improve the accuracy of machine learning model outputs. Encoding domain-knowledge constraints into the loss function still seems akin to fine-tuning techniques within the machine learning domain, while the Constraint Enforcement Module (CEM) directly adjusts the final outputs according to the constraints. The paper lacks a comparison between the adjusted results and the actual results: it does not analyze whether the outputs, after the constraints are enforced, are sufficiently close to the ground truth. Such an analysis would help assess the accuracy improvements contributed by CEM and KAL separately. Overall, Zoom2Net is a novel work that effectively improves accuracy by applying formal methods to machine learning, and the two techniques can likely be integrated even more tightly and deeply in the future.